
The Highs & Lows of Double-Blind Testing

The Double-Blind Debate #2

Editor: I am writing these words of response to the brouhaha over double-blind testing as a consumer and cover-to-cover reader of Stereophile.

After dulling my brain on all the statistical volleying (I've lost track of who's on which side), I realized that the real argument was whether or not there is a significantly audible difference between different makes of hi-fi equipment. Each side used "scientific conclusions" regarding double-blind testing to support a subterranean contention.

I put forward these reasons why, regardless of statistical accuracy or lack of it, double-blind testing is of limited importance:

(1) The fact that an argument about audible differences between equipment exists at all means that some people think they hear a difference. I know that if I were to hear such a difference and some skeptic were to indicate otherwise through a double-blind test in which I participated, I'd have to choose between accepting that I was out of my mind and being convinced that the test was invalid. I would certainly care little about statistics when making such a decision.

(2) Double-blind testing is itself blind to the quality of the difference, which is what reviewing is all about and is why I read your excellent magazine. (By the way, I have selected Stereophile as my favorite based on my own carefully controlled, statistically significant double-blind test.)

(3) Any external variables (eg, additional components or reflected sound) will only mask real differences.

(4) This type of testing is even more cumbersome than Mr. Leventhal indicates: a preamplifier would have to be tested through its MM phono, MC phono, low Z, monitor, etc. inputs.

(5) Even if double-blind testing were to indicate a highly probable but slight audible difference between two pieces of equipment among a group of listeners, it may not result in any preference for one over the other among the same group of listeners. In a head-to-head comparison, the choice would then come down to overall value, rendering the double-blind test meaningless despite its technically positive result.

(6) Other parameters need to be considered in parallel with comparative differences in sound. A few months ago I purchased a new receiver. I had a specific amount of money to spend and a short list of features I had to have. I can assure you I didn't need double-blind testing to eliminate almost everything after a few minutes of listening. My final contenders were by Denon and NAD. Again, I did not need double-blind testing to know that, at moderate listening levels, using demo material supplied by the store, I could not hear any difference. But when I started to turn the volume up, the NAD simply could not keep up with the Denon.

There may be flaws in my arguments, but the point is that in the real world, where the buying and selling of hi-fi is the final concern for everybody involved, double-blind testing is both impractical and irrelevant. We all have to live with our ears, whether what they hear is real or imagined. As I finish this I am listening to a very nice new release of Vivaldi's concerti. My brain is doing a complex integration of dollars spent with resulting sound, and I am happy.—Dave Werner, Medford, MA

The Double-Blind Debate #3

Editor: Les Leventhal's "Type 1 and Type 2 Errors in the Statistical Analysis of Listening Tests" (JAES, Vol.34 No.6) caused me to revisit experimental design and statistical analysis. Dr. Leventhal offers good basic statistical advice, but falls into a trap I've often found myself in: it's easy to forget that an experiment's validity rests on its design, and that statistics only report on the reliability of its results. I also believe that the binomial parameter p, which the author uses as an analog for listener sensitivity, is set quite high relative to both the position established by the audiophile camp and actual practice. This renders the author's conclusions about fairness moot.
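The interplay between Type 1 error, Type 2 error, and the assumed listener sensitivity p can be made concrete with the binomial distribution. What follows is a minimal sketch, not Leventhal's own calculation: the 16-trial test, the 12-correct pass criterion, and the range of p values are hypothetical choices for illustration. It shows that the Type 1 error depends only on the pass criterion, while the Type 2 error depends heavily on how sensitive the listener is assumed to be; a high assumed p makes Type 2 errors look small and the test look fair.

```python
from math import comb


def tail_prob(n: int, c: int, p: float) -> float:
    """P(X >= c) for X ~ Binomial(n, p): chance of c or more correct answers in n trials."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(c, n + 1))


def error_rates(n: int, c: int, p_listener: float) -> tuple[float, float]:
    """Return (type1, type2) for a test that declares 'audible' when >= c of n trials are correct.

    type1: probability that a pure guesser (p = 0.5) passes anyway.
    type2: probability that a listener with true sensitivity p_listener fails.
    """
    type1 = tail_prob(n, c, 0.5)
    type2 = 1.0 - tail_prob(n, c, p_listener)
    return type1, type2


# Hypothetical 16-trial test requiring 12 correct; compare several assumed sensitivities.
for p in (0.6, 0.7, 0.8, 0.9):
    t1, t2 = error_rates(16, 12, p)
    print(f"p = {p:.1f}: Type 1 = {t1:.3f}, Type 2 = {t2:.3f}")
```

The Type 1 figure is identical on every line, while the Type 2 figure swings widely with p, which is why the choice of p drives any conclusion about whether a given test is fair to the listener.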

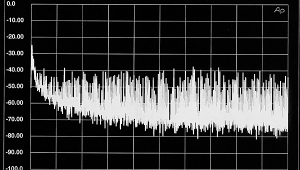

Experiments are made valid (ie, measure what they claim to measure) by good design, not by statistical analysis. The perfect experiment would be completely free of bias, perfectly sensitive to the variable under test, and would require only one trial. However, the experimenter, after conducting such an experiment, might be uncertain that his method was perfect and so repeat it just to be sure. Then, through statistical analysis, the probability of chance results (Type 1 error) or insensitivity (Type 2 error) can be determined. Note that even with one trial the results are valid. Another 1,000 or 1,000,000 trials do not increase the validity of the work; however, reliability increases with more trials, as does confidence that the results are true.
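The distinction between validity and reliability can be illustrated numerically. This is a hedged sketch under stated assumptions, not a procedure taken from this letter or from Leventhal: it holds the Type 1 error at or below a hypothetical 5% level, assumes a hypothetical listener who answers correctly 70% of the time, and reports how the Type 2 error changes as trials are added. Adding trials improves how reliably a real difference is detected; it does nothing to repair a biased or insensitive design.

```python
from math import comb


def tail_prob(n: int, c: int, p: float) -> float:
    """P(X >= c) for X ~ Binomial(n, p)."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(c, n + 1))


def min_criterion(n: int, alpha: float = 0.05) -> int:
    """Smallest number of correct answers out of n that a guesser reaches with probability <= alpha."""
    for c in range(n + 1):
        if tail_prob(n, c, 0.5) <= alpha:
            return c
    return n + 1  # no achievable criterion (only happens for very small n)


def type2_error(n: int, p_listener: float, alpha: float = 0.05) -> float:
    """Chance that a listener with true sensitivity p_listener fails a test held at Type 1 <= alpha."""
    c = min_criterion(n, alpha)
    return 1.0 - tail_prob(n, c, p_listener)


# Hypothetical listener who is right 70% of the time; vary only the number of trials.
for n in (10, 16, 25, 50, 100):
    print(f"{n:3d} trials: need {min_criterion(n):3d} correct, Type 2 = {type2_error(n, 0.7):.3f}")
```

Because the number of correct answers is discrete, the achieved Type 1 error sits somewhat below the 5% ceiling and varies with n, so the Type 2 error does not fall perfectly smoothly at every trial count, but the overall trend with more trials is downward.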
