
A Question of Bits Page 2

For still more efficient coding, linear PCM can be replaced by a "floating-point" code in which leading zeros are eliminated by repetitive division. (The linear PCM codes in a single CD contain more than a billion leading zeros, in small signals and wherever a large waveform crosses the zero-voltage axis.) A small number such as 0.00000001 in decimal arithmetic becomes "1E-8" in floating-point form; the number after the E tells the decoder how many places to shift the decimal point (here, eight places to the left) to restore the original value.
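To make the idea concrete, here is a minimal Python sketch of stripping leading zeros from 16-bit PCM samples. It is an illustration only, not the scheme used by any particular coder: the function names, the sign-plus-length-field layout, and the 5-bit length field are all assumptions made for this example.

```python
def encode_sample(sample: int) -> tuple[bool, int, int]:
    """Strip leading zeros: return (is_negative, significant_bit_count, magnitude)."""
    magnitude = abs(sample)
    return sample < 0, magnitude.bit_length(), magnitude


def decode_sample(is_negative: bool, bit_count: int, magnitude: int) -> int:
    """Rebuild the sample; bit_count is what a bitstream reader would use to know how many bits to fetch."""
    return -magnitude if is_negative else magnitude


def encoded_size_bits(sample: int, width: int = 16) -> int:
    """Bits needed: 1 sign bit + a length field + only the significant bits."""
    length_field = width.bit_length()  # 5 bits can hold any count from 0 to 16
    return 1 + length_field + abs(sample).bit_length()


if __name__ == "__main__":
    for s in (3, -120, 20000):
        print(s, encode_sample(s), encoded_size_bits(s), "bits")
```

In this toy layout a quiet sample such as 3 costs 8 bits instead of 16, while a loud sample such as 20000 costs 21. The saving therefore depends on small values dominating the signal, which is why practical coders tend to share one exponent across a block of samples rather than sending a length field with every sample.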

With typical program material, lossless coding can cut the bit-rate by at least a factor of two. Thus CD audio can be represented by an average of no more than eight bits per sample, with absolutely no loss of sound quality. For still greater compression, however, lossless coding must be combined with some form of lossy coding. In audio and video that usually means "perceptual" coding: removing bits not by a purely mathematical scheme but by rules based on the limits of human perception.

Whose limits? Which humans? The point of perceptual coding is to avoid wasting bits recording parts of the signal that you won't see or hear anyway. So the question is not whether it alters the signal—it certainly does—but by how much, judged by whether the alterations are audible or visible. The usual aim is that impairments won't be detected by most people, most of the time, with most program material, via most playback systems.

With any perceptual coding system, it is a certainty that some critical listener or viewer somewhere, using carefully selected test signals and the best playback systems, will be able to detect some impairment. That is regarded as inevitable and appropriate, since there is no economic reason to design a mass-production consumer format to satisfy only a tiny minority. In an ideal world a signal-delivery format might be found that would satisfy critical listeners without costing more than a compromised system. The CD is as close to that goal as we're going to get.

The greater the compression ratio, the greater the likelihood that significant numbers of people will notice its effects, at least with some program material. In choosing a compression system, the challenge for the manufacturer (or national standards organization) is to find the optimum cost/benefit tradeoff, where "cost" refers to signal impairments as well as money. If even the most acute critics cannot detect any impairment, or are able to detect a loss only by using weird and unrealistic test signals, the designers may be subject to criticism for not providing enough benefit. By accepting more impairments, they could have achieved a greater reduction in bandwidth, size, or price.

One of the costs of perceptual coding is the complexity of the circuitry involved. It is vastly more complicated than the linear PCM system used for CDs. In CD recording, about every 22.7 microseconds (µs), or 44,100 times per second, a quantizer selects a single binary code to represent the amplitude of the entire audio signal. In the PASC encoder used for DCC the coding process involves many more steps, all of which must be executed very quickly. The incoming signal is split into 32 filter bands; the signal level in each band is measured and compared with the threshold of hearing; the threshold in each band has to be computed since it is affected by the signal levels in adjacent bands; then the signal in each band is requantized by a system that allocates more bits to bands containing strong signals and fewer bits (or none) to bands where the signal is below the momentary threshold. With so many decisions to be made, a perceptual coder must be a fast and powerful computer.
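The last step in that chain, giving more bits to bands well above the threshold and none to bands below it, can be sketched in a few lines of Python. This is a simplified illustration, not the PASC algorithm: the function name, the 15-bit ceiling, and the rule of one bit per roughly 6 dB of signal above the threshold are assumptions made for this example, and a real encoder also has to fit its allocation into a fixed bit budget per frame.

```python
import math

DB_PER_BIT = 6.02  # each added bit lowers quantizing noise by about 6 dB


def allocate_bits(levels_db, thresholds_db, max_bits=15):
    """Return a bit count per band from the band level and its masked threshold (both in dB)."""
    bits = []
    for level, threshold in zip(levels_db, thresholds_db):
        margin = level - threshold  # how far the signal rises above the threshold
        if margin <= 0:
            bits.append(0)  # inaudible band: spend no bits on it
        else:
            bits.append(min(max_bits, math.ceil(margin / DB_PER_BIT)))
    return bits


if __name__ == "__main__":
    # Three hypothetical bands: strong signal, masked signal, signal just above threshold.
    print(allocate_bits([60, 10, 30], [20, 15, 24]))
```

With the made-up levels shown, the strong band receives 7 bits, the masked band none, and the marginal band 1.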

The ability to do this is a direct result of the rapid growth and declining cost of computing power in the form of integrated-circuit microchips. The accelerating speed of the microchip revolution was illustrated by a survey in the May issue of Sky and Telescope. It has become a cliché to observe that today's laptop computers have more speed and data-processing power than the room-size IBM System 360 computers that, as recently as 1970, were found only in the million-dollar government science labs where I worked in those days. Microchip power is growing so rapidly that current PCs outperform even big VAX and Cray supercomputers of the mid-'80s. For example, here are the times required by several computers to do a particular series of calculations:

Apple II: several minutes

IBM PC-AT: 2.0 seconds

Cray X-MP/24: 0.75 second

486-based PC: 0.16 second

In the 1970s, when linear-PCM recording was adopted for both studio recorders and the consumer CD, designers believed that computers fast enough to do audio and video compression in "real time" would not be practical until sometime in the next century. Thanks to the acceleration of digital processing power and speed seen here, wide-range perceptual coding arrived before the CD's tenth anniversary.

Nor has this process leveled off: Intel will launch the 586 ("Pentium") series of microprocessors this year, the 686 series is in development, and comparable advances in DSP chips are coming from other manufacturers such as Motorola and Texas Instruments. For this reason, one of the most hopeful trends in compression is the use of "embedded-key" systems whose recordings contain both the signal and the rules for decoding. With this approach, which Philips adopted for the DCC, the coding logic can be refined as chips continue to improve (and as designers learn more about listener perception), and existing players will adapt to the "smarter" rules embedded in future recordings.

Following is a brief summary of related audio and video developments, some of which were reported at the April NAB convention.

Dolby AC-2A

Dolby's AC-2 low-bit signal-transmission system, described here last December, was developed for satellite relays of networked radio programs, and is also being used to record film soundtracks. Lucasfilm has editing studios at Skywalker Ranch (near San Francisco) and in Los Angeles, 500 miles to the south. They are tied together by a long-distance T1 digital phone line leased from AT&T, and AC-2 coding is used to send soundtracks back and forth between the studios. For example, the sound of Backdraft was edited at Skywalker Ranch while director Ron Howard listened in LA. Arnold Schwarzenegger spoke dialog for Terminator 2 into a microphone in LA and was recorded at the ranch.

AC-2 is not one circuit but a family of Dolby compressed-audio coders, with bit rates varying from 192 kilobits/second per audio channel (for applications requiring maximum fidelity) down to 64 kb/s/ch for noncritical uses such as spoken voice. The 192kb/s version is said to be sufficiently free of artifacts that it can be used successfully in hard-disk editing workstations, where signals may be subjected to multiple cycles of encoding and decoding during a mixdown. (Reportedly, after five code/decode cycles, 192kb/s AC-2 is not noticeably impaired when compared to a 16-bit linear PCM source.) At the NAB convention Dolby introduced a digital studio-to-transmitter link for FM stations, using AC-2 coding. It promises much better sound than the equalized telephone lines that most stations have used in the past, at lower cost than the full-bandwidth digital microwave STLs that a few stations use.
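For a sense of scale, the quoted AC-2 rates can be set against 16-bit linear PCM with a little arithmetic. The sketch below assumes the CD sampling rate of 44.1kHz for the PCM reference, which the text above does not specify for AC-2 itself; the function names are likewise invented for this example.

```python
CD_SAMPLE_RATE_HZ = 44_100  # assumed reference rate (the CD standard)
PCM_BITS_PER_SAMPLE = 16


def pcm_rate_bps(sample_rate_hz=CD_SAMPLE_RATE_HZ, bits=PCM_BITS_PER_SAMPLE):
    """Bit-rate of one channel of linear PCM, in bits per second."""
    return sample_rate_hz * bits


def compression_ratio(coded_bps):
    """How many times smaller the coded stream is than the PCM reference."""
    return pcm_rate_bps() / coded_bps


def bits_per_sample(coded_bps, sample_rate_hz=CD_SAMPLE_RATE_HZ):
    """Average bits spent on each sample at the coded rate."""
    return coded_bps / sample_rate_hz


if __name__ == "__main__":
    for rate in (192_000, 64_000):
        print(rate, round(compression_ratio(rate), 3), round(bits_per_sample(rate), 2))
```

On those assumptions one PCM channel runs at 705,600 bits/second, so the 192kb/s version is a reduction of roughly 3.7:1 and the 64kb/s version roughly 11:1.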

The newest version of AC-2, called AC-2A, promises still better sound with no increase in bit-rate. In the design of any digital compression system a difficult compromise must be made between filter resolution and speed. Filters should be narrower than the "masking" curve of the human ear, to ensure that listeners won't hear modulation noise or distortion during continuous tones. But the laws of nature impose a limit: narrow-band filters have long time-constants, preventing the filtered signal from changing rapidly in amplitude. In demonstrations of several low-bit coding systems at last fall's Audio Engineering Society convention, gross distortion was audible in transients (glockenspiel and the syllables of human speech). The effect sounded like a severely overloaded amplifier that was clipping only transient waveforms.

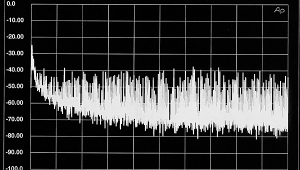

AC-2A overcomes this obstacle by not having a predetermined set of filters. Since the filtering is performed in the digital domain anyway, the computer that does the coding can change the filters in response to the character of the incoming signal. Every 1/100 second the computer selects a different set of filter bandwidths and associated time-constants—up to 40 narrow and therefore slow bands for continuous signals; an alternate group of 15 wider, faster bands for transients; or an intermediate "transition" group. Within each band the filtered signal is requantized using a "floating-point" coder whose quantizing step size varies with the size of the signal itself, coarse for large signals and fine for small ones.
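The two ideas in that paragraph, picking a filter set frame by frame and letting the quantizing step follow the signal size, can be sketched in Python as follows. This is not Dolby's algorithm: the energy-ratio test, its thresholds, the 4-bit mantissa, and the function names are all hypothetical choices made for illustration. The labels stand for the narrow-band set, the wide-band set, and the intermediate group described above.

```python
def classify_frame(frame, transient_ratio=8.0, transition_ratio=2.0):
    """Pick a filter set for one frame by comparing energy in its second half to its first."""
    half = len(frame) // 2
    early = sum(x * x for x in frame[:half]) + 1e-12  # small constant avoids divide-by-zero
    late = sum(x * x for x in frame[half:]) + 1e-12
    ratio = late / early
    if ratio >= transient_ratio:
        return "transient"   # sudden attack: use the wider, faster bands
    if ratio >= transition_ratio:
        return "transition"  # moderate change: use the intermediate group
    return "steady"          # continuous signal: use the narrow, slow bands


def requantize(samples, mantissa_bits=4):
    """Quantize a block with a step size scaled to its peak, so small signals get fine steps."""
    peak = max(abs(x) for x in samples)
    if peak == 0:
        return 0.0, [0] * len(samples)
    levels = 2 ** (mantissa_bits - 1) - 1  # 7 positive levels for a 4-bit code
    step = peak / levels
    return step, [round(x / step) for x in samples]


if __name__ == "__main__":
    frame_len = 441  # 1/100 second at an assumed 44.1kHz sampling rate
    print(classify_frame([0.3] * frame_len))
    print(classify_frame([0.0] * 220 + [1.0] * 221))
    print(requantize([7.0, -3.0, 0.0]))
```

A loud block and a quiet block with the same shape produce the same codes but different step sizes, which is the sense in which the step is coarse for large signals and fine for small ones.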
