
The Pepsi Challenge (or taste test) is still the gold standard!

Get off my lawn!

Our disappointment in the quality of new luxury goods vs old luxury goods is hardly the stuff of moral outrage. It's not a big deal. We make do. We can still find the old stuff if we really want it, assuming we can afford it. (That's why they're called luxuries, I suppose.) The objectivist engineers whom we hold responsible are simply doing their jobs in the manner in which they were trained, and many are music-lovers in their own right. And the skeptics who cheer them on . . . well, some of them are nice people, too. (Indeed, the best dentist I ever had, who sadly moved away from our area a few years ago, was a dedicated skeptic—and a thoroughly wonderful, intelligent, delightful, kindhearted human being.)

The trouble is, many of the loudest people in the skeptic community, by their own admission, appear to be less interested in investigating seemingly anomalous events with fairness and an open mind than in shooting down everything that strikes them as "woo-woo," foisted on innocent consumers by "flimflam artists" and "fruitcakes." (As you may have noticed, skeptic slang appears to be frozen in an era when Paul Lynde and Arte Johnson ruled the airwaves.) Many of those who would protect us from "pseudoscience" have themselves become "pseudoskeptics" (footnote 5): defenders of a strict point of view rather than unbiased seekers of truth. And it is telling that they care more about you and me being duped by cable manufacturers—and astrologers, and bigfoot researchers, and mentalists—than they do about being themselves duped by the "professional" skeptics whose livelihoods depend on an endless supply of outrage.

And make no mistake, if the debate between the pro- and anti-blind-testing factions ended tonight—poof, gone!—Stereophile would carry on as if nothing had happened: We would continue to produce, each month, a magazine of quality and integrity, reviewing products in the manner we know to have the greatest relevance to the reason for those products' existence. But if the debate ended tonight, so too would Skeptic and Skeptical Inquirer magazines, the James Randi Educational Foundation, and any number of individuals and entities that require a steady stream of donations, which themselves require a similarly steady stream of discordant debate.

That speaks to another unattractive characteristic of the dumber skeptics in the audio trenches: While audio perfectionists tend not to care what's bought and used by people on the other side of the fence, advocates of blind testing are obviously driven apoplectic by the very notion that someone else is enjoying domestic playback in a manner that differs from their own. Which is sad, really. On behalf of all fans of perfectionist equipment, I say, in the spirit of brotherly love: Guys, knock yourselves out. If laboratory-grade "proof" is something you require before parting with your money, then please, by all means, keep your money tight in your hands. We wish you well, and we look forward to seeing you in the afterlife, where all talk will be of music, not of sound.

Though it appears that the most contentious skeptics are unlikely to take heed, I urge them: Please disregard any product, or any genre of product, if its manufacturer is unable to produce a reason for buying with which you are satisfied. Buy what you want. Don't buy what you don't want. Enjoy recorded music in whatever manner suits you best. Believe what you believe—but for God's sake, keep an open mind. And if you can't manage that, then at least stop worrying so damn much about the way the hobby is approached by people who aren't you. Your need for mathematical validation, like your name-calling, your hysteria, and your propensity for using pictures of robots as your chatsite avatars, makes you look like a bunch of angry, jealous, socially inept losers. No offense.

New from Schick

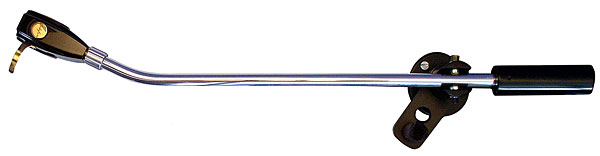

It was in our March 2010 issue that I first wrote about the enduringly recommendable Thomas Schick tonearm: at $1675, the highest-value 12" tonearm I have ever tried (footnote 6). I bought and kept my review sample, which has since seen use with a wide range of EMT and Ortofon pickup heads: integrated combinations of cartridge and headshell in which the former element is generally a low-compliance type and the latter is suitably high in mass.

It hasn't always been easy for Schick owners to get good results with standard-mount cartridges: The arm doesn't come with a headshell, forcing users to choose from the limited selection of same available on the new and used markets. Among the most popular of the current-production headshells—and my choice for the past four years—is the Yamamoto HS-1A, which is made of ebony, and which one sees advertised at prices ranging from $82 to $98. But the Yamamoto weighs only 8.5gm (sans finger lift), which on the face of it would seem too light to be compatible with a low-compliance cartridge.

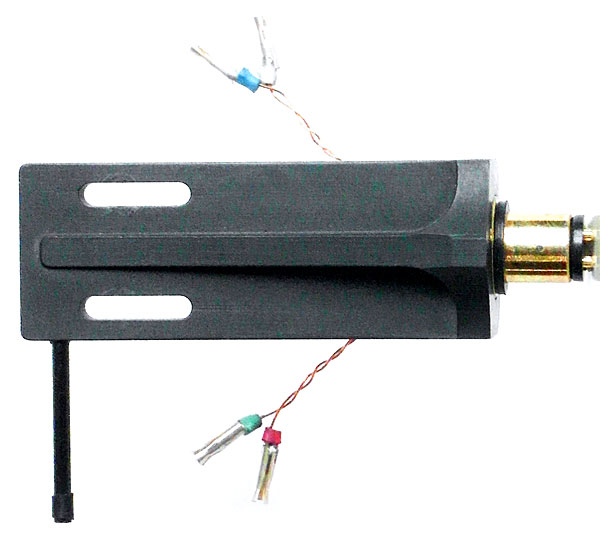

Finally, in July 2014, Thomas Schick began filling orders for the proprietary headshell he unveiled in May at the High End Show, in Munich. The Schick headshell (€249) is no less adjustable than the Yamamoto, yet its 15.2gm mass is far more suited to the sorts of phono cartridges for which, I dare suggest, one buys an extra-long (and thus extra-massive) tonearm in the first place.

The Schick headshell is made from "technical" graphite, soaked with a resin that damps the material's inherent brightness and allows the headshell to be handled without smudging the user's fingers. The design, in which an integral ridge is used for torsional rigidity, is attractively simple. Signal leads are solid copper, apparently with a clear coating for insulation. A setscrew for azimuth adjustment is located on the headshell's inboard side—"for a cleaner look," Schick says.

Because my own Schick headshell arrived only a couple of weeks before this issue's deadline, I've so far had only limited experience of it. But that experience—with my Miyabi 47 (9.27gm) and Miyajima Premium BE Mono (13.6gm) cartridges—has been thoroughly positive. The most obvious differences between the Yamamoto and the Schick are the latter's far tighter, cleaner bass and, remarkably, the manner in which cartridges mounted in the Schick suffer less breakup during heavily modulated passages. Both qualities, of course, are among the things one might expect from a phonograph whose combination of tonearm and cartridge exhibits a more optimal resonant frequency.

€249 is surely more than I'm used to spending on a headshell—my sample isn't a freebie or a loaner—and the price may be a bridge too far for some hobbyists. But, as Thomas Schick writes from his new workshop in Liebenwalde, about 24 miles north of Berlin, graphite is extremely difficult to machine; consequently, graphite parts are very expensive. Incidentally, the company that machines the graphite for Schick also makes graphite parts for a German manufacturer of vacuum tubes—something that Schick didn't realize when he first approached the company with his idea for a headshell. "When I was trying to explain to the owner what a headshell actually is, he simply said, 'Yeah, sure—like for tube audio?' I was startled, but I knew I was at the right place."

Footnote 5: This term was coined by one of their own: Marcello Truzzi, a founder of the Committee for Skeptical Inquiry, who quit that organization out of disgust with his colleagues' habit of "moving the goalposts" whenever it appeared they might be losing a battle with someone who wished to validate a seemingly anomalous finding.

Footnote 6: Thomas Schick, Dorfallee 47, 16559 Liebenwalde, Germany. Tel: (49) (33054) 69-36-38. Web: www.schick-liebenthal.de.

The Pepsi Challenge (or taste test) is still the gold standard!

Talk about wrong on an epic scale.

The entire argument is based on false equivalences and just plain falsehoods.

There is absolutely nothing inherent in blind listening that demands listening only in snippets, making snap judgements, determining "which seems more real," or anything of the sort.

Why do such lies continue to be perpetuated? Is it out of ignorance or is it done intentionally?

There is absolutely nothing inherent in blind listening that demands listening only in snippets, making snap judgements, determining "which seems more real," or anything of the sort.

No there isn't. But the fact remains that all published tests that have "proved" that cables, amplifiers, digital players, etc. "sound the same" have been performed in exactly this "sip test" manner. As I wrote 20 years ago after having taken part as a listener in some such tests (organized by others), "blind testing is the last refuge of the agenda-driven scoundrel."

John Atkinson

Editor, Stereophile

No there isn't.

Then the article should not have been published, because it leads the reader to believe that there is.

But the fact remains that all published tests that have "proved" that cables, amplifiers, digital players etc "sound the same" have been performed in exactly this "sip test" manner.

Fine. Then speak to those tests. Don't use them to broad brush the entirety of blind testing.

As I wrote 20 years ago after having taken part as a listener in some such tests (organized by others), "blind testing is the last refuge of the agenda-driven scoundrel."

And what's your last refuge, John? It appears to be resorting to the same sort of misinformative broad brush tactics that are routinely employed by the racist and the bigoted. I guess that's ok as long as it's being directed at a testing protocol and not at human beings? Because I don't think you'd let that fly if the discussion were centered around race or religion.

Then the article should not have been published, because it leads the reader to believe that there is.

I don't think it unfair to publish an article that correctly points to a major flaw of blind tests as typically practiced because the Platonic ideal of such testing doesn't suffer from such a flaw.

As I wrote 20 years ago after having taken part as a listener in some such tests (organized by others), "blind testing is the last refuge of the agenda-driven scoundrel."

And what's your last refuge, John? It appears to be resorting to the same sort of misinformative broad brush tactics that are routinely employed by the racist and the bigoted.

Misinformation? There are many ways for an incompetent, naive, or dishonest tester to arrange for null results to be produced by a blind test, even when a small but real audible difference exists. (Remember, any tendency to randomize the listeners' scoring will reduce the statistical significance of the result.) I have witnessed all the following examples of such gamesmanship by others running blind tests, the results of all of which have been proclaimed as "proving" that there was no audible difference:

First, do not allow the listeners sufficient (or any) time to become familiar with the room and system. Second, do not subject the listeners to any training. Third, do not test the proposed test methodology's sensitivity to real but small differences. And fourth, and probably most importantly in an ABX test, withhold the switch from the test subjects. If the tester then switches between A and B far too quickly, allowing only very brief exposures to X, he can produce a null result between components that actually sound _very_ different. Here are some other tricks I have witnessed being practiced by "agenda-driven scoundrel" testers to achieve a false null result:

Misidentify what the listeners are hearing so that they start to question what they are hearing.

Introduce arbitrary and unexpected delays in auditioning A, B, or X.

Stop the tests after a couple of presentations, ostensibly to "check" something but actually to change something else when the tests resume. Or merely to introduce a long enough delay to confuse the listeners.

Make noises whenever X is being auditioned. For example, in one infamous AES test that has since been quoted as "proving" cables sound the same, the sound of the test speakers was being picked up by the presenter's podium mike. The PA sound was louder than the test sound for many of the subjects. See www.stereophile.com/features/107/index.html for more details.

Arrange for there to be interfering noise from adjacent rooms or even, as in the late-1990s SDMI tests in London on watermarking, use a PC with a hard drive and fan louder than some of the passages of music.

Humiliate or confuse the test subjects. Or tell them that their individual results will be made public.

Insist on continuing the test long past the point where listener fatigue has set in. (AES papers have shown that good listeners have a window of only about 45 minutes where they can produce reliable results.)

Use inappropriate source material. For example, if there exists a real difference in the DUTs' low-frequency performance, use piccolo recordings.

These will all randomize the test subjects' responses _even if a readily audible difference between the devices under test really exists_.

And if the test still produces an identification result, you can discard the positive scores or do some other data cooking in the subsequent analysis. For example, at some 1990 AES tests on surround-sound decoders, the highest and lowest-scoring devices, with statistically significant identification, were two Dolby Pro-Logic decoders. The tester rejected the identification in the final analysis, and combined the scores for these two devices. He ended up with null results overall, which were presented as showing that Dolby Pro-Logic did not produce an improvement in surround reproduction.

Or you limit the trials to a small enough number so that even if a listener achieves a perfect score, that is still insufficient to reach the level of statistical significance deemed necessary. This was done in the 1988 AES tests on amplifiers and cables, where each listener was limited to 5 trials. As 5 correct out of 5 does not reach the 95% confidence level, the tester felt justified in proclaiming that the listeners who did score 5 out of 5 were still "guessing."
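As a rough check on the arithmetic behind trial counts, here is a minimal Python sketch. It assumes each trial is an independent 50/50 guess under the null hypothesis and uses a one-sided test; the function names are illustrative and are not taken from any of the tests described. Under those assumptions, 5 correct out of 5 has a chance probability of 1/32 (about 3.1%), and a two-sided reading doubles that to 6.25%, so whether a perfect five-trial score clears a 95% threshold depends on which convention the tester adopts. Either way, five trials leave no room for a single miss.

```python
from math import comb


def abx_p_value(correct: int, trials: int) -> float:
    """One-sided chance of scoring at least `correct` out of `trials`
    when every answer is a coin flip (assumed null hypothesis)."""
    favourable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favourable / 2 ** trials


def min_correct_for_significance(trials: int, alpha: float = 0.05):
    """Smallest score whose one-sided p-value is at or below `alpha`,
    or None if even a perfect score cannot reach it."""
    for correct in range(trials + 1):
        if abx_p_value(correct, trials) <= alpha:
            return correct
    return None


if __name__ == "__main__":
    for n in (5, 10, 16):
        print(n, "trials: need", min_correct_for_significance(n), "correct")
    print("5/5 one-sided p =", abx_p_value(5, 5))
    print("4/5 one-sided p =", abx_p_value(4, 5))
```

With 16 trials the same calculation asks for 12 correct answers, which tolerates four misses; with 5 trials a single miss pushes the p-value to 6/32.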

Or, even when the results are incontrovertible, continue to claim for years, even decades, after the event that the opposite was the case. This happened with some ABX tests I performed in 1984 where I could identify an inversion of absolute phase to a very high degree of statistical significance (<1% that the result was due to chance). 20 years later, one "objectivist" was still claiming the opposite in on-line forums.

So yes, "scoundrels" is an appropriate word to use for these organizers of these so-called "scientific tests."

John Atkinson

Editor, Stereophile

I don't think it unfair to publish an article that correctly points to a major flaw of blind tests as typically practiced because the Platonic ideal of such testing doesn't suffer from such a flaw.

But the article doesn't point to a major flaw. The whole Pepsi Challenge example is completely irrelevant. As I said below, the Pepsi Challenge wasn't a difference test, it was a preference test. Nor did it show that the "sip test" precluded anyone from determining that there was a difference between Pepsi and Coke. That's because that was never the purpose of the Pepsi Challenge. I'm not aware of anyone claiming that Coke and Pepsi taste the same. With the Pepsi Challenge, that was a given. The question was what do people prefer when they don't know which one they are drinking.

Bottom line, the Pepsi Challenge is wholly irrelevant to the issue of whether or not there are actual audible differences between components or cables and I hope I never see it mentioned in Stereophile again.

Misinformation? There are many ways for an incompetent, naive, or dishonest tester to arrange for null results to be produced by a blind test, even when a small but real audible difference exists.

Sure. But when you use examples of that to paint a broad brush condemnation of the whole of blind testing, you are being misinformative. Art's article isn't about people conducting blind testing poorly, it's saying that blind testing itself is wrong. Didn't you bother to read it? "Time and again, the use of blind testing in an attempt to quantify human perception has been discredited..." That's not a condemnation of the people who have used blind testing poorly, it's a condemnation of blind testing itself. And then he proceeds to use an example that's not even relevant to the context of the Great Audio Debate.

The rest of your post is examples of people doing a poor job of conducting blind tests. And I really don't have much to argue with the examples you've given. But that's a people problem, not any inherent problem with blind testing. Address those people and those tests. Otherwise, what you're doing is tantamount to using a few bad actors to condemn an entire race of people. If it's not okay to do that with people, I see no reason for it to be okay with a testing protocol. Both instances are just as irrational and bigoted.

And if you're so dissatisfied with how some people have conducted blind tests, why not use some of Stereophile's resources to do it right, instead of just bitching about it and misinforming people with articles like this one? I mean, if you have no interest in doing that, then what's the point of bitching about it? Either you have an interest in determining whether or not there are any actual audible differences or you don't. And if you don't, then why would you care about poorly conducted blind tests?

I don't think blind testing in the comfortable surroundings of the home and over an extended period of time is a bad idea. Maybe the above poster would volunteer his time toward the effort? Art could put you up in the spare bedroom for a couple of months.

Actually the late Tom Nousaine set up a number of people with ABX systems in their own homes so they could test whenever they wanted to, for as long as they wanted to and using their own system.

" Are we disappointed when an otherwise good electronics manufacturer lowers its manufacturing costs by switching from hand-wired circuits to PCB construction, because the company was persuaded that "that doesn't make any difference"?"

Good Lord, please spare us this baloney. Hand wired circuits? Other than pulling out a bunch of myths that have no backing in fact, please explain what advantage there is to hand wiring over pcb's. Never mind that no two hand wired circuits are ever the same. Or that your wiring paths are often longer and your chances for joint failure are far greater. Same goes for crosstalk, hum induction, ground loops, etc.

Of course hand wiring isn't even an option for most transistor circuits, so I guess we're mired in the wonderful world of 1930's amplifier technology too.

"Are we disappointed when a manufacturer of classic loudspeakers begins making cabinets out of MDF instead of plywood, because an engineer convinced the company that "that doesn't make any difference"?"

How about the entirely plausible explanation that MDF is actually BETTER than plywood from a rigidity and self damping perspective, and you can make better cabinets by using it?

Other than manufacturers testing variations of their own products, no one can, has ever done, or will ever do DBT's of audio equipment that shows any significant results, "scientifically", statistically, or otherwise that will be meaningful to the average consumer, let alone to "hardcore" audiophiles.

John Atkinson knows so... So does anyone who understands research methodology.

Articulate and entertaining as always.

I concur that dbt is meaningless as applied to home audio gear. The audio hobby is, by definition, an aural experience; there can be no greater arbiter than my own ears in choosing gear for myself.

If others wish to be guided by numbers on paper, may they be happy in their way as I am in mine.

There's no arguing subjective preference. It is what it is regardless of the reasons behind it.

The problem comes from the fact that many self-professed "subjectivists" simply aren't happy being subjectivists. If they were, they wouldn't give a shit about the objective side of things and wouldn't waste any time railing against it. Instead, they try playing both sides of the street and attempt to try and pass off their subjective experiences as something more than that.

If there were more true subjectivists out there, there wouldn't be anything to argue about. They wouldn't attempt to pass off their subjective experiences as anything more than that and no objectivist would have any legitimate argument to make against their subjective experiences.

Subjectivism and objectivism can peacefully co-exist. But there are stone-throwers on both sides who seem intent on not allowing that to happen.

Well said Sir

Analog is dying , its death-rattle can be mistaken for Stone Throwing , we can Blind test it ! , if you dare .

Tony in Michigan

Dear Mr. JA & AD ,

It seems a debate about this matter is rather pointless , the entire World went Digital .

I speak as someone that owned more Koetsu Carts than most people own Interconnects , I was a Importer of British stuff in the 1970s , Esoteric Audio in the 1980s ( retail ) and manufacture of OEM stuff for High-End as HP would call our Industry .

The Audio Hobbyists that own thousands of Vinyl ( Todd the Vinyl Junkie with 12,000 being a typical example ) cling and support this old storage medium , a few newbies are trying out the Garage Sale $1.00 Vinyls on Roy Hall's stuff , maybe buying a Schiit head Amp thing for $120.00 , too bad ? , maybe but there it is ! , you can blind test that and get the consistent result of seeing people walking down any street anywhere on the Planet with white wires hanging from their ears , it's obvious gentlemen : the marketplace listened and decided , perhaps blindly but overwhelmingly .

Vinyl still has a nucleus , East Coast , NY , probably Brooklyn or thereabouts . There , a person can live vinyl and feel at home , somewhat at home , at least able to find kindred spirits . Vinyl is becoming a Cult now , probably little more than a historical artifact for the balance of the Population ( 99.9999% or greater ) . The Glue that holds it together is that people "Save" their parents' Vinyl and 78 Record Collections , for old times' sake and old family memories' sake , something like the Photo Albums from yesteryear .

The whole game has changed , something I'm certain you are trying to cope with . HP just passed , his memory is being discussed , his position in the Industry will be filled , vacuums are always filled , most likely by a digital person , I'm reasoning , someone that understands Digital to Analog Converters perhaps your Tyll in the Frozen North , another bright penny is that Darko lad from Down Under , he writes like a Good Brit , very clever with words is he , ( might be a nice addition to your staff ) , does a nice job of kissing up to manufacturers ( advertisers ) .

Well , Gentlemen , Grado make Headphones now , haven't heard of a Grado phono cart mentioned in the last few years ( by anyone ) , kinda says it all . I know people that are going gaga over their 1000 , trying to work out a good amp matching . The Audio Hobby is Alive and exciting but not in Vinyl or Analog segments , sorry , it's over , dead and nearly forgotten .

You may still be right about Vinyl's excellence but you'll be dead right !

Tony in Michigan

Oh , OK , you're right , of course .

I , love doing silly word modifications , from time to time .

I accept your wise council .

Tony in Michigan

I'm not one of those awful objectivists, but even I can see that this is a lazy opinion piece which resorts to diatribe and unconvincing analogies to prosecute a flawed argument.

Debate is great, but the article struck me as angry and defensive. Mostly, I thought there was a whiff of hypocrisy in this statement:

"While audio perfectionists tend not to care what's bought and used by people on the other side of the fence, advocates of blind testing are obviously driven apoplectic by the very notion that someone else is enjoying domestic playback in a manner that differs from their own."

Not really.

I've seen clever "set-ups" lead people , a blind test can be trickery , those Magic Show people in Vegas fool people all the time .

However , I used to do blind tests in my Audio Salon , comparing all my small monitors . I used Electrocompaniet , VPI , Koetsu and MIT 750 wire . We blind tested : Pro-Ac Tablettes , LS3/5a , Celestion SL 600s ( the Alum version ) , Quad ESL 63s , the little Kindels , and any other small monitor anyone brought in . Consistent result was the Pro-Ac Tablette , every time . We even had customers perform the test for other customers , same result . Tablettes were outstanding back then , they may still be , wouldn't know , I've gone to Active Genelecs , would be a Meridian M2 Active owner if they still made 'em .

I guess that Blind testing can be a starting point , time reveals all , other considerations come into play and anyone can be fooled or tricked , but Blind Testing does help undecideds with little patience or self-assuredness make an informed ( kinda informed ) quick decision .

It is a safe position to worry about the results of a Blind Test , a person that's been burned will tend to deny the usefulness of it but we all are trying to eliminate our emotions from a scientific decision , Blind can help with that .

Tony in Michigan

Single blind testing can be fun and informative, but the emphasis by some on double blind testing is misguided and misplaced!

It all depends on what you're after.

If you're looking to determine actual audible differences, then you need to control for the well known limitations of purely subjective listening. This can't be done by way of ego, vanity or sheer willpower.

If you simply want to enjoy reproduced music, then there's no need for any sort of blind testing. Go with whatever gives you the greatest pleasure and enjoyment. Period. Full stop.

If you're truly a subjectivist, that's all that should matter and you don't worry about what anybody else says.

The informative...one may actually hear the difference between different lengths of the same wire and prefer the sound of a specific length.

The fun...you might actually prefer the sound of your parents' Pioneer receiver over the Krell monster amp!!

You never know.

But you are right, Steve. Just enjoy! And have fun!

"To judge the authenticity, the effectiveness, the legitimacy of any work of art requires three things: a participant who is familiar with the work(s) at hand; a test setting that's appropriate to the appreciation of art; and a generous amount of time for the participant to arrive, without stress, at his or her conclusions."

This is bullshit. 1) Equipment is not Art. Art can not be assessed on the "usefulness" scale. 2) Art appreciation, Art 'legitimacy' has nothing to do with 'it' not being a forgery. Art appreciation requires nothing of the subject, Assessment of the real Art vs Fake Art is a "guys in white lab coats" thing, This is 'Art' on sterile cellular level. Molecules being compared to other molecules.

Art- seriously, no pun intended.

" 'Coke's problem is that the guys in white lab coats took over.' Took over and got it wrong, I would add. Wrong on an epic scale."

I won't argue with you there - it's not like back in the day :)

Both of these examples go straight to the false equivalences that make up the "argument."

With audio testing, at least of the sort that's being discussed here, the task of the listener isn't to identify the fake from the original. It's simply to determine if they can reliably detect a difference between two presentations.

But this false equivalency is further reinforced by saying "Notably, those are the same challenges confronted by the listener who wishes to distinguish the sound of one piece of playback gear from that of another: He, too, must examine two samples of the same work of art in an effort to determine which seems more real."

That's complete nonsense. The listener isn't tasked with determining that which "seems more real." Just whether or not there is a difference. Even a layperson could probably look at an original and a forgery and accurately tell you if there's a difference between the two. Being able to tell you which is the forgery is an entirely different matter.

And the Pepsi challenge example is even more egregious. Art says "Time and again, the use of blind testing in an attempt to quantify human perception has been discredited, most recently in the best-selling book Blink, by journalist Malcolm Gladwell."

This is complete bullshit, and it's sad to see Gladwell's work used to make such a bullshit argument.

The Pepsi Challenge wasn't a difference test, it was a preference test. The "sip test" didn't prevent anyone from determining if there was a difference between Pepsi and Coke. That's not even what was being tested for crying out loud! The Great Audio Debate has never been about preference. There's simply no arguing peoples' personal preferences. What, are you going to argue with someone that their favorite color isn't blue?

Gladwell's argument was that the "sip test" didn't necessarily reflect peoples' preferences compared to drinking a whole can or bottle, which is how most people consume soft drinks. It is NOT any sort of discrediting of blind testing as a whole as Art claims it is.

I simply don't see how any rational, thinking person could, in good faith, put forward the arguments that Art did.

This is a sad hypocritical article, and Stereophile should be ashamed to have published it. More engineering shaming as if any of the equipment you so cherish would exist without them.

Art/John, if blind testing is so faulty because it requires snap judgments, why does every subjective review start off with "I heard a dramatic difference immediately" or something similar? Good enough for subjectivism but not actual science?

As always, these arguments are the same made by religious zealots. Ugh.

if blind testing is so faulty because it requires snap judgments, why does every subjective review start off with "I heard a dramatic difference immediately" or something similar?

Every review? Perhaps you are reading a different magazine?

As always, these arguments are the same made by religious zealots.

Speaking for myself, my criticisms of blind testing as typically practiced are based on extensive experience, both as a listener and as an organizer/proctor. It is very difficult to design a blind test where all the variables other than the one in which you are interested are eliminated. And if those variables are not eliminated, the test results are not valid. Just because a test is blind does not make it "scientific." Those who insist that it is are the zealots, sir.

John Atkinson

Editor, Stereophile

John, first of all, I respect you and a few other writers here (Kal, Tom Norton, Robert Deutsch) as you do your job with integrity. I have always appreciated that Stereophile attempts to perform high quality measurements of reviewed equipment. I think these results in conjunction with subjective impressions are very useful! However, there is another group of writers here that seem to be at the ready to dismiss engineering and science, as if they would have any components to listen to without those disciplines! It's especially insulting to listen to someone who is obviously not a scientist criticize the scientific method of a test (very poorly, at that).

Every review? Perhaps you are reading a different magazine?

John, come on. If I took the time, I could dig up countless Stereophile reviews that have expressed just those types of sentiments.

Just because a test is blind does not make it "scientific." Those who insist that it is are the zealots, sir.

I agree, anyone saying that objective measures are the be-all and end-all is equally at fault. But this article is at the same level of extremity on the other side. Sorry, but I think Toole and Voecks know a hell of a lot more about audio design than anyone working at Stereophile, and blind testing is a crucial part of their design process. It is a useful tool to be used, not the be-all and end-all.

Furthermore, bias has been extensively proven a hundred times over, John. Whenever a subjectivist discounts such bias, they immediately lose all credibility.

and listening to your equipment under the stress of being tested? No. When these guys conduct the product reviews, they are just trying to act like any consumer would act if they bought the product. Some reviewers are better at this than others. Some show measurements to try to explain the product, along with listening tests.

The problem in this industry is that there is a virtually unlimited number of configurations with limitless amounts of content to listen to, our room acoustics differ, and then our listening skills differ.

Trying to conduct ABX tests is just VERY difficult. I do have a suggestion for making it better, or at least for making differences easier to tell.

Play a sound file and take 3D spectrum analysis measurements with each "system" that's being compared. If the only change is a cable, and there is any audible difference, then THEORETICALLY there should be a difference in the 3D spectrum analysis. Then it's a matter of figuring out whether one can tell which is which by looking at the measurements only, without knowing which equipment produced which measurement. Why is 3D spectrum analysis a good idea? Because music is volatile, and it's measured in terms of rise time, decay, sustain, harmonic structure, etc. If you look at measurement differences in the same passage, then maybe you can eventually get your listening skills to the point where you can actually notice that difference. I think the audio magazines should investigate this method. But it may require examining a 3-minute passage of a song and comparing the results, and that's difficult unless you have printouts of the two measurements placed on top of each other to see where those changes are.
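A rough sketch of what such a comparison could look like, using only the Python standard library. Everything here is an assumption made for illustration: the two "captures" are synthetic (a two-tone test signal, and the same signal passed through a simple one-pole low-pass standing in for a hypothetical difference between systems), the frame size and sample rate are arbitrary, and `frame_magnitudes` and `largest_difference` are made-up helper names. Real measurements would come from recordings of each system, not from a simulated filter.

```python
import cmath
import math

def frame_magnitudes(samples, frame_size):
    """Split samples into non-overlapping frames and return DFT magnitudes per frame.

    The result is a time x frequency grid of magnitudes, i.e. the data behind
    a "3D" (time, frequency, level) spectrum plot.
    """
    frames = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        mags = []
        for k in range(frame_size // 2 + 1):
            acc = sum(x * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n, x in enumerate(frame))
            mags.append(abs(acc))
        frames.append(mags)
    return frames

def to_db(mag, floor=1e-9):
    """Convert a magnitude to decibels, clamping tiny values to avoid log(0)."""
    return 20.0 * math.log10(max(mag, floor))

def largest_difference(spec_a, spec_b, min_mag=1.0):
    """Return (frame, bin, dB difference) where capture B deviates most from A.

    Bins where the reference A is below min_mag are skipped, so that numerical
    noise in empty bins is not mistaken for a difference.
    """
    worst = (0, 0, 0.0)
    for t, (row_a, row_b) in enumerate(zip(spec_a, spec_b)):
        for k, (a, b) in enumerate(zip(row_a, row_b)):
            if a < min_mag:
                continue
            diff = to_db(b) - to_db(a)
            if abs(diff) > abs(worst[2]):
                worst = (t, k, diff)
    return worst

def one_pole_lowpass(samples, alpha):
    """Very simple low-pass filter, used here only to simulate a system change."""
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out

SAMPLE_RATE = 8000   # Hz, arbitrary for the demo
FRAME = 64           # samples per analysis frame, arbitrary

# Synthetic "passage": a 500 Hz tone plus a quieter 3 kHz tone.
signal = [math.sin(2 * math.pi * 500 * n / SAMPLE_RATE)
          + 0.5 * math.sin(2 * math.pi * 3000 * n / SAMPLE_RATE)
          for n in range(FRAME * 4)]

capture_a = signal                          # stand-in for system A
capture_b = one_pole_lowpass(signal, 0.5)   # stand-in for system B

spec_a = frame_magnitudes(capture_a, FRAME)
spec_b = frame_magnitudes(capture_b, FRAME)

frame_index, bin_index, diff_db = largest_difference(spec_a, spec_b)
freq_hz = bin_index * SAMPLE_RATE / FRAME
print(f"largest difference: frame {frame_index}, {freq_hz:.0f} Hz, {diff_db:.1f} dB")
```

In this toy case the biggest deviation lands on the 3 kHz tone, because the simulated filter rolls off the highs. Whether a difference of a given size is audible is a separate question that the measurement alone does not answer.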

Remember, every piece of equipment in the audio chain, including cables, IS a filter. We just have to figure out how to see visually how the product or product combination does its filtering, and then get our listening skills trained to hear those differences.

Bla bla bla is what I hear in my head when reading most of the articles in Stereophile. Keep up the discouraging writing.

Now, in order for you to spend your time and everyone else's time more wisely, go to another site. That way we don't have to read your Bla Bla Bla comments, which have the same impact on others. If I read an article I don't like, then I stop reading it, or I simply let the words go in one ear and out the other, and that goes for ANY site/mag.


JA - Do you see any signs of future vitality in high-end audio?

JG Holt - Vitality? Don't make me laugh. Audio as a hobby is dying, largely by its own hand. As far as the real world is concerned, high-end audio lost its credibility during the 1980s, when it flatly refused to submit to the kind of basic honesty controls (double-blind testing, for example) that had legitimized every other serious scientific endeavor since Pascal. [This refusal] is a source of endless derisive amusement among rational people and of perpetual embarrassment for me, because I am associated by so many people with the mess my disciples made of spreading my gospel. For the record: I never, ever claimed that measurements don't matter. What I said (and very often, at that) was, they don't always tell the whole story. Not quite the same thing.

JG Holt - "Vitality? Don't make me laugh. Audio as a hobby is dying, largely by its own hand. As far as the real world is concerned, high-end audio lost its credibility during the 1980s, when it flatly refused to submit to the kind of basic honesty controls (double-blind testing, for example) that had legitimized every other serious scientific endeavor since Pascal."

Despite Gordon's statement above, published in our November 2007 issue (see www.stereophile.com/asweseeit/1107awsi/index.html), it is fair to point out that despite his first call for "scientific testing," which was published in June 1981 and is scheduled to be republished on this site later this week, he almost never performed any blind testing himself. Throughout his reviewing career, until he resigned from Stereophile in 1999, he continued to practice the same sighted listening in his reviews as Art Dudley and all the rest of the magazine's review team.

John Atkinson

Editor, Stereophile

John, the point is not whether JG Holt did/not perform "some" blind testing himself, it's that the **founder**/editor of Stereophile, and thus by extension Stereophile's official position itself, was not "anti-blind" or against basic honesty controls (double-blind testing, for example) that had legitimized every other serious scientific endeavor since Pascal.

I don't have to blind test drugs or orchestra members myself to be for blind testing of drugs and orchestra members (rather than anti), like any other rational person.

Further, he went on to say this:

Remember those loudspeaker shoot-outs we used to have during our annual writer gatherings in Santa Fe? The frequent occasions when various reviewers would repeatedly choose the same loudspeaker as their favorite (or least-favorite) model? That was all the proof needed that [blind] testing does work, aside from the fact that it's (still) the only honest kind. It also suggested that simple ear training, with DBT confirmation, could have built the kind of listening confidence among talented reviewers that might have made a world of difference in the outcome of high-end audio.

So apparently there was "some" form of blind tests, perhaps casual/unofficial, but I interpret "we" to include you, despite your current rejection of them (and Stereophile's present, official position).

To me, that seems like a shift in Stereophile's position from 15 years ago, whether or not blind tests were an official policy for review.

DBT has never legitimized any scientific endeavor. You have also overextended yourself by confusing what one person says with what really happens.

Nothing has changed at Stereophile.

John, the point is not whether JG Holt did/not perform "some" blind testing himself, it's that the **founder**/editor of Stereophile, and thus by extension Stereophile's official position itself, was not "anti-blind" or against basic honesty controls (double-blind testing, for example) that had legitimized every other serious scientific endeavor since Pascal.

But if Gordon felt so strongly, as exemplified by the quote from my 2007 interview with him, surely it is relevant to point out that he still didn't feel strongly enough ever to act on that feeling?

Further, he went on to say this: "Remember those loudspeaker shoot-outs we used to have during our annual writer gatherings in Santa Fe?" So apparently there was "some" form of blind tests, perhaps casual/unofficial . . .

These blind tests were neither casual nor unofficial. I designed and organized these tests, along with Tom Norton, and we did several loudspeaker reviews in the mid 1990s using these tests along with the usual sighted tests.

We found that to get meaningful results from blind testing, the test procedure needed to be prolonged and very consuming of both time and resources. So consuming, in fact, that it was not really feasible to implement such testing as routine for reviews. If the blind testing was less rigorous, in the manner described by Art in this essay, then the results became meaningless.
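One statistical reason a short blind test says little can be sketched with a standard binomial calculation. This is offered as general background, not as a description of how the Santa Fe tests were scored; the function name `abx_p_value` and the trial counts are illustrative assumptions.

```python
from math import comb

def abx_p_value(correct, trials):
    """Chance of scoring at least `correct` out of `trials` by pure guessing.

    Assumes each trial is an independent 50/50 guess, as in a forced-choice
    ABX comparison where the listener hears no real difference.
    """
    favourable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favourable / 2 ** trials

# Hypothetical scores at different test lengths.
for trials, correct in [(5, 4), (10, 8), (16, 12)]:
    p = abx_p_value(correct, trials)
    print(f"{correct}/{trials} correct: p = {p:.4f}")
```

Under these assumptions, 4 out of 5 correct is something a guesser manages almost one time in five, so a handful of trials cannot separate a real difference from luck. Each listener needs many trials, and that is before accounting for fatigue, level matching, or program material.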

Just because a test is performed blind does not make it "scientific," as you and Gordon appear to suggest. So the choice is between two forms of flawed testing procedures to support reviews.

. . . but I interpret "we" to include you, despite your current rejection of them (and Stereophile's present, official position). To me, that seems like a shift in Stereophile's position from 15 years ago, whether or not blind tests were an official policy for review.

Gordon founded this magazine using reviews based on sighted listening, and that policy has remained unchanged since 1962. I have tried using blind testing as part of our testing but it turned out to be impracticable when performed in a rigorous manner. We continued, therefore, using the method Gordon intended from the start: careful and conscientious sighted listening. If you feel that this doesn't produce results that can be relied upon, then you are welcome to express that opinion, of course. But doesn't it strike you as strange that the person with experience of blind testing rejects it for equipment reviews and the ones without such experience support it?

John Atkinson

Editor, Stereophile

But if Gordon felt so strongly, as exemplified by the quote from my 2007 interview with him, surely it is relevant to point out that he still didn't feel strongly enough ever to act on that feeling?

No, not unless you wanted to introduce a red herring, and possibly an ad hominem, into the argument. Gordon (or anyone else) does not have to carry out blind tests of pharmaceuticals, audio or orchestra members to accept their validity as a means of testing. The fact remains, per your transcription of his words, he/Stereophile were not anti-blind as an official position during his reign, regardless of official review practices.

We found that to get meaningful results from blind testing, the test procedure needed to be prolonged and very consuming of both time and resources. So consuming, in fact, that it was not really feasible to implement such testing as routine for reviews. If the blind testing was less rigorous, in the manner described by Art in this essay, then the results became meaningless.

Which says ZERO about the efficacy/validity of blind testing in audio, which Stereophile now officially rejects.

Just because a test is performed blind does not make it "scientific," as you and Gordon appear to suggest. So the choice is between two forms of flawed testing procedures to support reviews.

I "appear to suggest" no such thing and I find your impugning of the deceased/unable to speak for themselves deplorable.

Two (flawed testing) wrongs don't make a right.

We continued, therefore, using the method Gordon intended from the start: careful and conscientious sighted listening.

With zero honesty controls, as if humanity doesn't apply to you. IOW, purely anecdotal opinion. Which I highly doubt your honesty-controls-rejecting readership views it as.

But doesn't it strike you as strange that the person with experience of blind testing rejects it for equipment reviews and the ones without such experience support it?

No, what I find strange is those who reject blind tests when they fail/produce (undesired) nulls, touting the results of blind tests when they/others pass with (desired) positives, à la a certain AES amp demo and some recent online games posted on AA.

cheers,

AJ

p.s. Amir, JJ, you and I sharing a beer discussing audio would be interesting, to say the least ;-)

Science is hardly ever applied the way you think it should, nor is science always "honest". Take Oxycontin, for example...(pun intended) Where's the "honesty" of science here?

Except perhaps for some manufacturers testing variations of their own equipment, no one in the entire audio industry does DBT.

No one.

Reliable testers get around mistakes and bias by repeating tests at different times, or changing some of the parameters of the tests. Reliable testers are good at self-policing, and good at observation. Observation is important because you can never assume that a testing script is good enough - those have to be analyzed or tested like anything else. Reliable testers are the best skeptics, because they know that differences lurk everywhere and can trip up the best-laid plans. Reliable testers know that their reputations can take a hit if they take shortcuts or cover up incomplete work.

1. The listener. How many people, self-proclaimed audiophiles, etc., are really trained in how to listen? Harman has an application and testing procedures with which they certify their listeners. Listeners first have to pass all of the levels of each test in the application, THEN they have to go down to Harman Labs and get retested to ensure that they didn't guess or cheat in any way, and they also have their hearing checked. I have downloaded the app and have only taken a few of the tests and eventually got to Level 6, but I still have a ways to go. I simply don't always have a good environment, since I live in an area where there is just too much outside noise that leaks into my home, so the noise floor is simply too high during the day. It's also a very time-consuming application, because it's not that easy once you get past about level 4 or 5 with these tests. I wish ALL magazine/on-line magazine reviewers of gear would become certified through the Harman program, because it would help give these reviews a little more credibility.

2. If the listener is NOT used to the room, the system and the setups being AB'd prior to being blindfolded, it's going to be more difficult. Familiarity with a system, room and content is important for picking out subtleties. Heck, when I get a new pair of speakers, etc., it takes me a while to figure out where the changes are, because I have to go through a large catalog of music and literally spend months listening to whatever change I made to figure out how good the change is. It takes time for one's ears/hearing to get accustomed to a system. Sure, big changes in a system are pretty noticeable, but the differences in cables, amps, etc. are not always HUGE changes. They are subtle, but still many times noticeable, and it's just a matter of getting familiar enough to know where those differences are.

3. Adding switch boxes and additional cables. I'm sorry, but if conducting an ABX test requires added equipment that's not normally used, then that equipment shouldn't be used. Unfortunately, you can't compare much audio gear against another in a quick ABX test without adding switch boxes and cables. These devices have measurable amounts of resistance, capacitance, inductance or any combination, and they can invariably make whatever you are trying to compare sound more like one another. Cables especially, since adding cables to another cable is just not done in real life.

4. Listening level, the passages to listen to, and control over both must be in the hands of the listener. If someone sets the SPL too high, it may cause short-term hearing damage if the average SPL is above 85dB. You also may or may not be able to tell differences between equipment at only one level; sometimes you have to adjust the volume to hear what differences there are at lower SPL and then at higher SPL. So the listener must have control over the volume setting and the content they are listening to, and be able to replay certain excerpts that they are really familiar with. Most ABX tests don't allow the listener to control the volume level, the content, etc.

5. Is the room soundproofed and with good room acoustics? Most people don't have this and that can make a big impact on how well we hear what's going on in a system. Are listening tests being done in perfect environments? Not always, especially at a trade show with hotel rooms in an environment where people are constantly going in/out of the room, talking, and making other noises.

6. How about those who listen to a product in a showroom at a dealership and then take the product home, and it doesn't sound anything like it did in the showroom? I can't stress enough how important room acoustics are and how they impact what we hear or perceive in any listening of audio equipment.

What I see is a lot of relatively ignorant people who simply don't have much money or much experience with high-end audio gear making up excuses to put down manufacturers, high-end magazines, and "audiophiles," and they use the ABX test as some sort of valid reason. It's just as invalid as anything else. Yeah, I'm not suggesting that every audio manufacturer is telling the truth. Sure, in any industry there are companies that market their products with a lot of suspicious claims. And there are no specific measurements that all manufacturers have to adhere to, and measurements alone are not going to tell us how good something sounds all of the time. Plus, you simply have to deal with people's subjectivity and the fact that everyone has a different skill set in terms of how to listen to audio gear.