
Were Those Ears So Golden? (DCC & PASC)

The whole field of subjective audio reviewing (listening to a piece of equipment to determine its characteristics and worth) is predicated on the idea that human perception is not only far more sensitive than measurement devices, but far more important than the numbers generated by "objective" testing. The scientific audio community, however, often dismisses subjective evaluation of audio equipment as meaningless. A frequent objection is the absence of thousands upon thousands of rigidly controlled clinical trials: because listening impressions are anecdotal, conclusions reached by subjective means are considered unreliable. The scientific audio community demands rigorous, controlled, blind testing with many trials before any conclusion can be drawn. Furthermore, any claimed ability to discriminate sonically that cannot be demonstrated under blind conditions is considered a product of the listener's imagination. Audible differences are said to be real only if their existence can be proved by such "scientific" procedures (footnote 1).

Given my conviction that listening is a valid means of evaluation, it was with great interest that I learned how Philips determined when their Digital Compact Cassette (DCC) encoding scheme sounded good enough to become the basis for a whole new audio format, one that may well become the primary music carrier during the coming decades (see Peter Mitchell's and Peter van Willenswaard's "Industry Updates" in this issue).

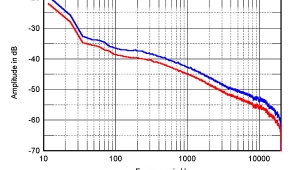

During demonstrations and technical discussions for small groups of journalists at the January 1991 Consumer Electronics Show, a Philips engineer described how the encoding process was evaluated during DCC's development. Without going over too much ground already covered by the Peters: DCC is based on drastically reducing the number of bits needed to encode an audio signal. Whereas normal 44.1kHz, 16-bit linear stereo digital audio (CD) consumes about 1.41 million bits per second, DCC's Precision Adaptive Sub-band Coding (PASC) generates only 384,000 bits per second. PASC thus represents the same audio signal with a little more than one quarter (about 27%) of the CD data rate. The tape speed and width inherited from the analog Compact Cassette imposed these restrictions on the PASC encoding system.
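The data-rate arithmetic is easy to check. This short Python sketch uses only the figures quoted above (44.1kHz, 16 bits, two channels for CD; 384,000 bits per second for PASC). It shows that PASC uses roughly 27% of the CD rate, a reduction of about 3.7 to 1. The per-channel figure is simply the total split evenly and is an illustrative average, not a claim about how PASC actually allocates bits between channels.

```python
# Figures from the text: CD is 44.1kHz, 16-bit, two channels; PASC is 384,000 bits/s.
CD_SAMPLE_RATE_HZ = 44_100
CD_BITS_PER_SAMPLE = 16
CHANNELS = 2
PASC_BITS_PER_SECOND = 384_000


def cd_bit_rate() -> int:
    """Linear PCM bit rate: samples per second x bits per sample x channels."""
    return CD_SAMPLE_RATE_HZ * CD_BITS_PER_SAMPLE * CHANNELS


if __name__ == "__main__":
    cd = cd_bit_rate()
    fraction = PASC_BITS_PER_SECOND / cd
    print(cd)                                # 1411200 bits/s for CD
    print(f"{fraction:.1%}")                 # 27.2% of the CD rate
    print(PASC_BITS_PER_SECOND // CHANNELS)  # 192000 bits/s, averaged per channel
```

Since 27.2% is slightly above 25%, "a little more than one quarter" is the accurate description of the PASC rate.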

Since PASC encoding relies on models of human hearing to determine what information gets encoded and what doesn't, Philips decided that trained, critical listeners, rather than measurements or the system's designers, should be the ultimate judges of the system. Philips therefore organized the PASC development team into three groups: design engineers, critical listeners, and statisticians. The design engineers' job was to keep refining the encoding process based on feedback from the listeners. The statisticians were to analyze the listening-test data and draw meaningful conclusions about what the listeners could and couldn't hear. The listeners were culled from quality-control inspectors at PolyGram.

The goal from the outset was to make PASC-encoded music indistinguishable from the CD source. The listeners could switch between the CD itself and the same CD passed through PASC encoding in real time. If they could tell the difference between the two, the engineers were sent back to the drawing board. Interestingly, even early versions of the system, which had obvious sonic problems, sounded fine to the engineers. Moreover, attempts by the "golden ears" to teach the engineers critical listening skills were unsuccessful.

Nearly all the listening was done without blind testing: the listeners knew when they were hearing PASC-encoded material. When the Philips engineer told the group of journalists this, two members of the audience, Tom Nousaine of The Sensible Sound and Audio's David Clark, both zealously anti-audiophile, were apparently shocked that Philips would rely on individuals' judgments of sound quality without blind testing (footnote 2).

After many iterations of the PASC encoding algorithm, some critical listeners still reported hearing differences between the CD and its PASC-encoded version. When the listeners underwent blind testing and the data were analyzed, the statisticians concluded that the results were not statistically significant: the listeners could not distinguish between CD and PASC. The listeners, however, vehemently asserted that they could detect differences, despite the supposedly infallible scientific proof that they couldn't.
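The article does not say how Philips's statisticians scored their blind tests, so the following Python sketch is only an assumption about a typical approach: each trial is a forced choice (ABX-style) that a pure guesser gets right half the time, and a listener "passes" if a one-sided binomial test is significant at the 5% level. The 16 trials and the 70% hit rate are hypothetical numbers chosen for illustration. The sketch shows how a null result can arise even when a listener genuinely hears a difference, which is the "insufficiently sensitive" case raised in footnote 1.

```python
from math import comb

# Hypothetical scoring scheme: each trial is a forced choice that a guessing
# listener answers correctly with probability 0.5. Not Philips's documented method.


def binomial_tail(n: int, k: int, p: float = 0.5) -> float:
    """Probability of k or more correct answers in n trials at hit rate p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def critical_hits(n: int, alpha: float = 0.05) -> int:
    """Fewest correct answers that a pure guesser reaches with probability <= alpha."""
    for k in range(n + 1):
        if binomial_tail(n, k) <= alpha:
            return k
    return n + 1  # no score out of n is significant at this alpha


def power(n: int, true_rate: float, alpha: float = 0.05) -> float:
    """Chance that a listener who really hears the difference at true_rate passes."""
    return binomial_tail(n, critical_hits(n, alpha), true_rate)


if __name__ == "__main__":
    n = 16  # hypothetical number of blind trials
    print(critical_hits(n))                # 12 correct needed to reach p <= 0.05
    print(round(binomial_tail(n, 11), 3))  # 0.105, so 11 of 16 is "not significant"
    print(round(power(n, 0.7), 2))         # 0.45
```

Under these assumptions, a listener who truly identifies the difference 70% of the time would fail a 16-trial test more often than not, and the statistician would report "no significant difference." More trials raise the test's sensitivity, but only at the cost of longer, more fatiguing sessions.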

Now the DCC project managers faced a monumental dilemma: were they to believe the "scientific" conclusion, that the listeners were imagining the differences, or should they accept the listeners' impressions despite the lack of any "objective" proof? Indeed, not only was there no scientific evidence that the listeners could hear differences, there was overwhelming evidence that they couldn't.

This decision was not trivial. The Philips team wasn't designing and evaluating a piece of equipment—they were setting the standards for an entire format that may become the most popular music-storage medium well into the 21st century.

On one hand, it would have been easy for the project managers to accept the scientifically reached conclusion that the listeners couldn't hear the difference between CD and PASC encoding. Such a decision would be largely immune to criticism if sonic flaws were discovered later: the managers could point to the blind listening-test results as "proof" that PASC encoding was inaudible. Believing the scientific evidence rather than the listeners would also allow the project managers to move on to the next stages of DCC development. Once settled on, the encoding algorithms had to be committed to silicon chips, a long and expensive process that had to be finished before the format could proceed.

However, if the managers chose to believe the listeners, who just knew they could hear the difference but couldn't prove it, the entire project would be delayed, with the attendant expense. It would be difficult to defend spending that extra time and money on the strength of listeners' unprovable impressions alone. Moreover, I suspect that further refinements to the encoding algorithm were yielding diminishing returns as the technology was pushed to its limit, so another round of improvements would likely provide few sonic benefits.

After what must have been long and careful consideration, the Philips project managers decided to believe the listeners. Others could not hear the reported differences, the listeners had failed the blind tests, and the statisticians had concluded from the data that no differences were heard; Philips nonetheless threw out the scientific evidence and went with the listeners' judgments. PASC encoding was subsequently refined until the listeners were completely satisfied that they couldn't tell the difference between the CD source and PASC, and that final judgment, too, was made without blind testing. If David Clark was shocked to learn that virtually all the critical listening had not been done blind, one can imagine his reaction on learning that the scientific evidence, the blind-test results, had been thrown out in favor of unprovable, anecdotal listening impressions.

This remarkable episode represents a triumph of audiophile values. First, the decision to evaluate the technology with trained, critical listeners rather than with measurements is itself significant. Second, it can be read as a rejection of the all-too-prevalent view that anecdotal listening impressions are worthless and have no place in audio science, a closed-minded view which asserts that if a phenomenon isn't scientifically provable, it doesn't exist. Finally, I see these events as an indictment of blind listening tests, whose validity audiophiles have long questioned.

Philips's approach to creating the PASC encoding system is exactly how audio technology and equipment should be developed: with critical evaluation by trained listeners. This isn't a radical concept, however; ask any high-end designer how he develops a product. What makes the PASC development story so significant is that Philips entered the project expecting the statisticians to be the ultimate judges of PASC encoding's audibility, based on the listening-test data. Remember, these were scientists, not audiophiles. They had full confidence (at least initially) that applying the scientific method would provide an incontrovertible answer to their question.

Moreover, other factors heighten the significance of Philips's decision to believe the listeners. Philips is a huge, sprawling bureaucracy, not a high-end designer in his lab. It must have taken a lot of explaining to convince executives not directly involved that more time and money were needed, even though PASC encoding had met the acceptance criterion established before the project started; ie, no statistical evidence that a difference could be heard under blind conditions.

On the way down to the hotel lobby after the press session, I happened to share the elevator with Tom Nousaine and David Clark. For some incomprehensible reason, David Clark regards my writings as a complete rejection of rational, scientific thought and an embrace of mysticism, a view he had expressed to me the previous day during a chance encounter. Apparently feeling defensive after the press conference's revelations, he turned to me in the elevator and said, "What a remarkable system that produces CD quality sound with such a low data rate. And it was all made possible by science."

Somehow, I think he missed the point.

Footnote 1: I remember taking part in a test where the "listeners" were asked to discriminate between Scotch, Cognac, and Bourbon under blind conditions. To my surprise, it proved impossible to do so. Should we take the results of this test to mean that there are no differences in taste or odor between these distilled liquors? Of course not! The real conclusion to draw is that the design and organization of a blind test that will detect real subjective differences is far from trivial. If such a test produces a null result, there are two equally valid conclusions: either there was no detectable difference, or the test was insufficiently sensitive to reveal a detectable difference. Neither can be dismissed out of hand—unless, of course, the reason the test was run in the first place was to prove there wasn't a difference, hardly a "scientific" attitude.—John Atkinson

Footnote 2: David Clark is a leading promoter of the view that all amplifiers sound the same. As designer of the ABX double-blind testing box, Mr. Clark regards blind testing as the great exposer of audiophile fraud and delusion. At an Audio Engineering Society conference in May 1990, he drew a parallel between audiophiles and believers in a flat earth.—Robert Harley