Blind Listening Letters part 4

Listening tests & statistics

Editor: As an experimental psychologist, I was glad to see an attempt to apply some of the methods of my science to the issue of audible differences among amplifiers ("As We See It," Vol.12 No.7). While you did a great many things right in setting up this experiment, there are some serious flaws in your statistical analysis which have important implications for interpreting your results.

The basic problem is that your respondents were (as you sort of acknowledge in your footnote on p.17) biased to respond "different," ie, to report that they could hear a difference between the amplifiers being compared. In fact, it can be calculated from your Table 3 that 2228 (approximately 63.1%) of the responses were "different." Given this fact, and the fact that overall the amps really were different on 53.6% of the trials, the expected performance by chance can be calculated as approximately 50.9%. (This calculation is based on the assumption that only the bias to respond "different," and not the actual situation, determines responses.) The number of correct responses expected by chance is then 0.631 (the expected proportion of "different" responses) x 1892 total "different" trials, plus 0.369 (the expected proportion of "same" responses) x 1638 total "same" trials. (The result of this calculation is about 1798, or 50.9% of the total.) This may seem like a small variation from your assumed chance level of 50.0%. In fact, however, the overall percent correct of 52.3% does not differ significantly from the correct chance level of 50.9%. (The Chi-square with one degree of freedom is approximately 1.34.) Therefore, the data do not show a statistically significant tendency to discriminate between amplifiers; Larry Archibald's comment (footnote p.17) is right on the mark.
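The arithmetic behind the 50.9% figure can be sketched in a few lines of Python. This is only an illustration of the calculation described in the letter, using the counts it quotes (1892 "different" trials, 1638 "same" trials, 2228 "different" responses). The function and variable names are assumptions made for this sketch.

```python
def bias_only_chance_level(different_trials, same_trials, said_different):
    """Expected score if responses reflect only a bias toward "different".

    Assumes (as the letter does) that listeners answer "different" with a
    fixed probability, regardless of what was actually played.
    """
    total = different_trials + same_trials
    p_diff = said_different / total  # observed bias toward "different"
    # A "different" answer is correct on different-amp trials,
    # a "same" answer is correct on same-amp trials.
    expected_correct = p_diff * different_trials + (1 - p_diff) * same_trials
    return p_diff, expected_correct, expected_correct / total


p_diff, expected_correct, chance = bias_only_chance_level(1892, 1638, 2228)
print(f'Bias toward "different": {p_diff:.1%}')
print(f"Expected correct by chance: {expected_correct:.0f} of 3530")
print(f"Chance level: {chance:.1%}")
```

Run as written, this should print a bias of about 63.1%, roughly 1798 expected correct responses, and a chance level of about 50.9%, matching the figures in the letter.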

A second comment concerns the so-called "differences between ability to distinguish amp difference and ability to determine sameness." This apparent difference is simply an artifact of the bias to respond "different." As the calculations above show, the expected (by chance, given the overall bias) levels of performance for "same" and "different" presentations are 36.9% and 63.1% respectively, figures that are statistically indistinguishable from the obtained levels of 38.3% and 64.4% (Table 3). Thus, any "caviling about test conditions" might be rejected, but not on the basis of this huge apparent difference.

A third (and final) comment concerns differences among the musical selections. While I have not done any detailed calculations like those above for the individual selections (this seems likely to be futile, given the reduced statistical power when using just part of the sample), note that the bias to say "different" varies substantially—as percent correct for "different" goes up, the percent correct for "same" goes down. What may be really going on here is simply this: Audiophiles believe that some types of music are more likely to reveal differences than others, and adjusted their response biases accordingly.

The upshot of all of this is that your data do not demonstrate audible differences between the amplifiers tested, under the conditions arranged. In statistical terms, the null hypothesis of no difference cannot be rejected. However, it is important to note what this conclusion (and the preceding argument) does not mean:

1) It does not mean that your respondents were deliberately biased to respond "different";

2) It does not mean that the null hypothesis of no difference is true—simply that it cannot be rejected with an acceptable degree of probability on the basis of the current data;

and 3) it does not mean that the gist of (most of) the admirably restrained conclusions on pp.20-21 is incorrect. I suspect the main lesson of this exercise in fact is just how difficult methodology for this kind of research really is.

By the way, I believe that there are real audible differences among amplifiers—though I'll probably never be able to afford most of those reviewed by Stereophile.—Rich Carlson, Ph.D., Pennsylvania State University, University Park, PA

Listening tests & contingency tables

Editor: I did not then have time to read or analyze the results of your listening test in detail, but I was struck by a certain consistency in the reported results. I copied a few of the key results for later study and offer my analysis for your consideration.

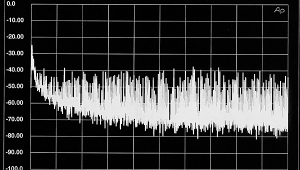

Using the normal approximation of the binomial distribution, I calculate a probability of approximately 0.065% of obtaining, purely by chance, any number of correct responses outside the range 1685-1845 (1765±80) in a total of 3530 trials. Thus, while the proportion of correct responses in the test, 1846/3530 or 0.5229, is only slightly greater than 0.5, it has a high degree of statistical significance.

An interesting alternative interpretation can be arrived at by employing a common statistical tool: the contingency table. This technique tests for dependence between two classes of variables; in this case, the test stimuli and the participants' responses. The first set of classifications examined is illustrated in the contingency table:

| Response | AB | BA | AA | BB | Total |
| --- | --- | --- | --- | --- | --- |
| "Different" | 743 | 475 | 503 | 507 | 2228 |
| "Same" | 391 | 283 | 320 | 308 | 1302 |
| Total | 1134 | 758 | 823 | 815 | 3530 |

The statistic employed to test for dependence in the contingency table is Chi-squared. The procedure for calculating Chi-squared for a contingency table can be found in virtually any statistics text. Chi-squared for this contingency table is calculated to be 4.581. At the 0.05 level of significance, the experimental value of Chi-squared must be at least 7.815 to permit dependency to be declared in the 2 x 4 contingency table. Using the classifications of the above table, one would conclude that the responses were independent of the stimuli.

One might reasonably argue that the 2 x 4 contingency table is too demanding and that the stimuli should be divided into only two classes, as were the responses. This classification is tested in the following contingency table:

| Response | Amplifier sequence different (AB or BA) | Amplifier sequence same (AA or BB) | Total |
| --- | --- | --- | --- |
| "Different" | 1218 | 1010 | 2228 |
| "Same" | 674 | 628 | 1302 |
| Total | 1892 | 1638 | 3530 |

Chi-squared for this table is calculated as 2.772. The critical value, again at the 0.05 level of significance, is 3.841. Thus, this contingency table also indicates that the responses were independent of the stimuli.
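For readers who want to check the two contingency calculations, here is a minimal Python sketch of the textbook Pearson Chi-squared statistic, applied to the counts in the two tables above. It applies no continuity correction, which is an assumption about how the letter's figures were obtained, and the function name is made up for this sketch. The critical values used are the standard tabulated ones at the 0.05 level: 3.841 for one degree of freedom and 7.815 for three.

```python
def pearson_chi_squared(table):
    """Pearson Chi-squared test of independence for a table of counts.

    `table` is a list of rows; returns (statistic, degrees_of_freedom).
    No continuity correction is applied.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if rows and columns are independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof


# Rows: "Different" and "Same" responses. Columns: AB, BA, AA, BB.
table_2x4 = [[743, 475, 503, 507], [391, 283, 320, 308]]
# Columns collapsed to (AB or BA) and (AA or BB).
table_2x2 = [[1218, 1010], [674, 628]]

# Standard tabulated critical values at the 0.05 level, keyed by dof.
CRITICAL_0_05 = {1: 3.841, 3: 7.815}

chi2_2x4, dof_2x4 = pearson_chi_squared(table_2x4)
chi2_2x2, dof_2x2 = pearson_chi_squared(table_2x2)
for label, chi2, dof in [("2 x 4", chi2_2x4, dof_2x4), ("2 x 2", chi2_2x2, dof_2x2)]:
    verdict = "dependent" if chi2 >= CRITICAL_0_05[dof] else "independent"
    print(f"{label}: chi-squared = {chi2:.3f}, dof = {dof}, critical = {CRITICAL_0_05[dof]} -> {verdict}")
```

Both statistics should come out close to the values quoted in the letter (small differences in the last digits are possible from rounding) and below their respective critical values, so the sketch reaches the same conclusion of independence.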

The 0.05 level of significance employed in the above analyses corresponds to a 5% risk of falsely declaring dependence. Other levels of significance could have been selected. A level of 0.05 is commonly employed in research applications and is not generally regarded as highly demanding.

The two contingency calculations described above suggest that the participants in Stereophile's listening test were inclined to perceive a difference between the two test segments of recorded music regardless of the sequence of amplifiers employed. In other words, the responses were independent of the stimuli and reflected a relatively consistent 61-66% bias in favor of hearing a difference. It is unclear whether this consistent bias was personal or pointed to some unrecognized flaw in the design or execution of the experiment. In the light of this interpretation of the results, the statistical significance of the 1846 correct responses in 3530 trials is called into question. A bias toward hearing (or reporting) differences favors correct responses in trials employing two different amplifiers and incorrect responses in trials in which the same amplifier is repeated. In the overall test, the condition of different amplifiers was employed in 1892/3530, or 53.6% of the trials, thus creating an overall bias in favor of correct responses.

While it is difficult to determine, after the fact, the cause of the bias described above, I would guess that it reflects some flaw in the design or execution of the experiment. Specifically, I suspect that it is a consequence of group behavior. The sonic differences which were being evaluated in this test were probably, at best, very subtle, leading to much uncertainty in the minds of participants. It would be reasonable to suspect that there were participants in most test sessions who believed that they heard sonic differences and who, further, telegraphed that belief verbally, by body language or in some other similar manner. I suspect that participants who were having great difficulty detecting sonic differences may have been influenced as much by the behavior of their peers as by the intended test variable. In short, I am suggesting that the flaw in the experiment may have been in conducting the test, in part, as a social event (fun), rather than as a rigorous test (work).

In the absence of a basic flaw in your experiment, the above statistical analysis suggests to me that Stereophile's amplifier test may have neatly pinpointed a key element in the high-end audio business: a propensity (is compulsion too strong a word?) on the part of aficionados to hear differences. It is intriguing to speculate how revealing your test might have been if, for instance, the audiophile's archetypical villain, the deadly Pioneer receiver, had been included. In view of the questionable ability of the participants in this test to distinguish between amplifiers of distinctly different design and measurably different frequency response, the prospect of similarly designed tests of speaker cables, interconnects, CD rings, or other such sonic, though not economic, trivia is almost too delicious to contemplate.—Paul F. Sanford, Portsmouth, NH

Listening tests & compromised results

Editor: I read with great interest your article on blind listening tests of amplifiers in the July 1989 edition of Stereophile, since this is an area in which I am quite interested. Nothing would please me more than to have a nonsubjective answer to the question: Can you really tell? (I suspect you can tell the difference between amplifiers, but I'd like to be firmly convinced.) I also appreciated the care you took in your testing and the fact that you correctly chose to ask the listeners only to decide whether they could tell a difference between the sound quality of music played on different amps. As you point out, this avoids adding listener preference to the test, which is a significant randomizing factor. I was disappointed, however, in other aspects of the test's construction. While you wisely decided to choose the amplifiers at random and to discard selections in which the same amplifier occurred too many times in a row, you did not symmetrize your test with respect to the number of times the amplifiers were switched and kept the same. This seriously compromises your test results.

As pointed out by Larry Archibald in footnote 9 on p.17, your results indicate that the listeners were strongly biased toward hearing a difference between samples whether or not the amplifiers were switched. This is undoubtedly a normal reaction for any person who is asked to participate in such a test, and such biases must be carefully removed from the results. You argue in the same footnote that you don't think that this affected the results since "anyone answering 'different' to every presentation would still have scored no better than chance, ie, 50%." However, this is only true if there were an equal number of times that the amplifiers were changed and kept the same. This is not true of your test. This is exactly my point!

From the results in Table 2, we can deduce that people selected "different" roughly 65% of the time. If we make this assumption and further assume the listeners cannot tell the difference between amplifiers, then applying this percentage to the data in Table 2 (ie, the number of times A-B, B-A, A-A, and B-B were presented to the listeners), I calculate that the listeners would have answered correctly 51.1% of the time due to this effect alone! This is quite close to your measured result of 52.3% correct answers. While there may still be some evidence that the listeners can tell a difference between amplifiers (since 52.3% is larger than 51.1%), this must considerably reduce the significance of your test.
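The effect of an unbalanced design can be illustrated with a short Python sketch. It assumes a listener who answers "different" 65% of the time regardless of what is played, and it borrows the trial totals from the contingency table quoted earlier on this page (1892 switched, 1638 unswitched). The balanced design of 1765 trials each is a hypothetical comparison, and the function name is an assumption of this sketch.

```python
def chance_level(p_say_different, switched_trials, unswitched_trials):
    """Fraction correct for a listener who cannot hear any difference and
    answers "different" with fixed probability `p_say_different`."""
    total = switched_trials + unswitched_trials
    correct = (p_say_different * switched_trials
               + (1 - p_say_different) * unswitched_trials)
    return correct / total


# Unbalanced design: trial totals as quoted earlier on this page.
unbalanced = chance_level(0.65, 1892, 1638)
# Hypothetical balanced design with the same total number of trials.
balanced = chance_level(0.65, 1765, 1765)

print(f"Unbalanced design: {unbalanced:.1%} correct from bias alone")
print(f"Balanced design:   {balanced:.1%} correct from bias alone")
```

With equal numbers of switched and unswitched trials, the bias cancels and the expected score is 50% whatever the value of `p_say_different`. With the unbalanced totals, the same bias alone yields about 51.1%.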

This same effect may also strongly influence your results concerning which type of music is best to differentiate between amplifiers. As you'll note from Table 4, the music selections that did best were exactly those pieces which had the highest percentage of amplifier changes during the test. Indeed, the drum recording, which you concluded was ineffective for telling amplifier differences, was the only piece for which the number of times that the amplifiers were switched and kept the same were roughly equal! This really seems damaging to your case.

Because of these flaws, I remain unconvinced that listeners can tell a difference between amplifiers. Please don't let this discourage you, however! I'm looking forward to more and better tests. But next time, please be careful to remove this bias by symmetrizing with respect to amplifier changes. By all means, select when to switch amplifiers at random, but ensure that there are equal numbers of A-B, B-A, A-A, and B-B samples in your test.—Richard Peutter, Center for Astrophysics and Space Sciences, University of California, San Diego, CA
