
Kudos, Stereophile, for the writing all those years back! Great to see the writers really dig into the story and provide the technical details for those curious about the new technology and wishing to understand how these things worked...

A couple of thoughts as they do still resonate through all these years:

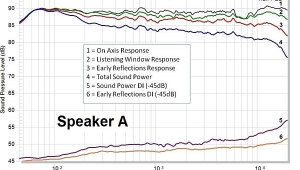

1. Lossy audio. Though nobody considers it "perfect" audio, it clearly sounds good. Remember that PASC is essentially MPEG-1 Layer I audio at 384kbps. This type of audio compression has long since been superseded by Layer II and Layer III. As such, a modern MP3 (Layer III) at 320kbps with modern psychoacoustic modeling is significantly more accurate than PASC ever was. Despite all the negatives thrown at MP3 and lossy encoding, the real problems back in the day were awful encoding software and low-bitrate 128kbps files. Lossless is still preferable when it's available, but folks shouldn't be worried about 256kbps AAC (iTunes) or modern 320kbps MP3 music. For portable players, where you're clearly walking around with headphones or riding the subway to work, something like hi-res lossless is surely a total waste of time and money.

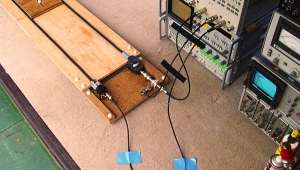

2. Great job, Philips, for setting up actual A/B comparisons, and even going the extra mile of rewiring for L-R listening! The obvious modern analogy to a company selling an encoding scheme is Meridian and MQA. So where is this level of openness with MQA these days? Where is the detailed A/B comparison of MQA? And where are the journalists willing to critique the claims with a proper demo?
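For a sense of scale on the bitrates in point 1, here is a rough back-of-the-envelope sketch. It assumes the comparison is against standard CD audio (44.1kHz, 16-bit, stereo); the names `CD_BPS`, `LOSSY_BPS`, and `compression_ratio` are just made up for illustration, and the ratios say nothing about how good each codec sounds.

```python
# Rough bitrate arithmetic. Assumption: the reference is standard CD audio
# (44.1 kHz sample rate, 16 bits per sample, 2 channels).
CD_BPS = 44_100 * 16 * 2  # uncompressed PCM, in bits per second

# Lossy bitrates mentioned in point 1, in bits per second.
LOSSY_BPS = {
    "PASC (MPEG-1 Layer I)": 384_000,
    "MP3 (Layer III)": 320_000,
    "AAC (iTunes)": 256_000,
}


def compression_ratio(lossy_bps, pcm_bps=CD_BPS):
    """Return how many times smaller the lossy stream is than the PCM stream."""
    return pcm_bps / lossy_bps


for name, bps in LOSSY_BPS.items():
    ratio = compression_ratio(bps)
    print(f"{name}: {bps // 1000} kbps, about {ratio:.1f}:1 versus CD")
```

So PASC was squeezing CD-rate audio by a bit under 4:1, while 320kbps MP3 and 256kbps AAC work with even fewer bits, which is why the quality of the psychoacoustic model matters so much.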

Again, a great article from the archives. But it's also a sad reminder of how far the level of discourse in the audiophile media has fallen these days.