| Columns Retired Columns & Blogs |

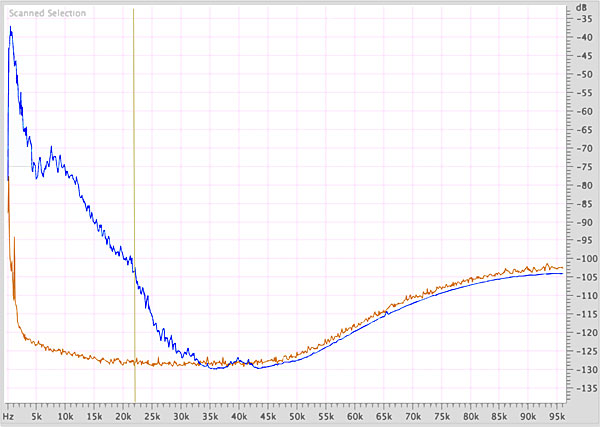

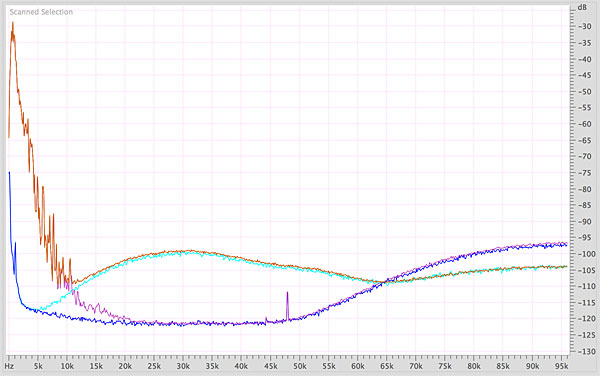

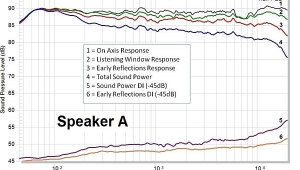

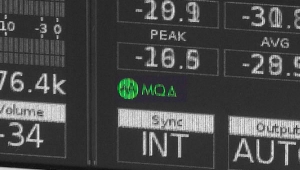

I get it. MQA aims to toss the noise living up where we can't hear anything anyway, while keeping the music's artifacts that are experienced yet not directly heard. I'll buy that, as should any audiophile who implicitly understands that commonly published specs never describe sound "quality" or "performance."

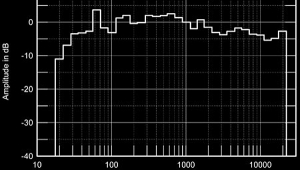

I'm reminded of every cassette deck magazine ad that claimed flat response out to 18kHz or better, as if that were all that mattered.

This article is the best explanation I’ve read about the file/stream size aspect. Nicely written, Jim.