Super Audio CD: The Rich Report

Although Philips invented the Compact Disc, it was only when Sony got involved in the early 1980s that it was decided—at the prompting of conductor Herbert von Karajan, a close friend of Sony's then-president Akio Morita—that the CD should have a long enough playing time to fit Beethoven's Ninth Symphony on a single disc (footnote 1). Even if the conductor was using very slow tempos, and even given the minimum pit size and track pitch printable at the time, the 16-bit data and 44.1kHz sampling rate they settled on gave them a little margin.

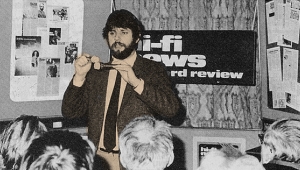

Footnote 1: I was present at the 1981 Salzburg, Austria press conference where Morita-san first presented this explanation of why Sony had asked for an increase in the planned disc size. (This was also the occasion where HvK made his infamous "All else is gaslight" quote about the CD.) Perhaps more important, Sony had persuaded their Dutch partners that the planned 14-bit word length was insufficient to achieve the desired sound quality. For me, the unsung hero of the CD concept was Toshi Doi, who came up with the error-correction code and was a forceful advocate for 16-bit word lengths. Dr. Doi is currently a leading light in Sony's robotics research efforts.—John Atkinson

From the beginning, it was pointed out that Linear Pulse-Code Modulation (LPCM) encoding at 16-bit/44.1kHz might not be good enough to be audibly transparent. Research indicated that a signal/noise ratio of greater than 98dB would be needed before the ear could no longer discern any artifacts in its most sensitive range. In addition, the choice of a 44.1kHz sample rate left a transition or guard band of just 2kHz between the audio passband and the stopband for the anti-alias (record) and reconstruction (playback) filters.
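As a rough check on the 98dB figure, the textbook expression for the signal/noise ratio of an ideal N-bit LPCM system driven by a full-scale sinewave is about 6.02N + 1.76dB. The sketch below simply evaluates it. The function name is illustrative, and the formula assumes undithered quantization error that is uniformly distributed, which real converters only approximate.

```python
import math

def ideal_lpcm_snr_db(bits: int) -> float:
    """Ideal SNR of N-bit LPCM for a full-scale sinewave (textbook model)."""
    # 20*log10(2**bits) is about 6.02 dB per bit; the extra 10*log10(1.5),
    # about 1.76 dB, is the ratio of sinewave power to the power of
    # uniformly distributed quantization noise.
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)

if __name__ == "__main__":
    for bits in (14, 16, 24):
        print(f"{bits}-bit: {ideal_lpcm_snr_db(bits):.1f} dB")
```

Under these assumptions a 16-bit system comes out at roughly 98.1dB, right at the threshold quoted above, which is why the format left so little margin.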

This narrowness of the guard band has always been controversial. With a 44.1kHz sampling frequency, signals higher in frequency than 22.05kHz will alias (ie, produce inharmonic image products in the audioband) and thus need to be filtered out. This requires very steep low-pass filters that can, some papers claim, cause audible problems. The 48kHz sampling rate of DAT recorders and DVD-Audio players roughly doubles the guard band, to 4kHz, but it is not widely reported how this small increase in sampling rate could make such a big difference in perceived sound quality. In recent AES preprints, the most optimistic assumptions about human hearing put the maximum sample-rate requirement at about 60kHz (footnote 2).
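To make the aliasing concrete, here is a minimal sketch of where an unfiltered tone lands after sampling. The function name and the example tones are illustrative only; the folding rule itself is standard sampling theory.

```python
def alias_frequency_hz(tone_hz: float, sample_rate_hz: float) -> float:
    """Frequency at which an unfiltered tone appears after sampling."""
    # Sampling folds the spectrum around multiples of the sample rate,
    # so the apparent frequency is the distance to the nearest multiple.
    nearest_multiple = round(tone_hz / sample_rate_hz) * sample_rate_hz
    return abs(tone_hz - nearest_multiple)

if __name__ == "__main__":
    for tone in (21_000, 25_000, 30_000):
        print(tone, "Hz ->", alias_frequency_hz(tone, 44_100), "Hz")
```

At 44.1kHz, a 25kHz tone reappears at 19.1kHz and a 30kHz tone at 14.1kHz. Neither product is harmonically related to the original, which is why everything above 22.05kHz has to be removed before the converter samples the signal.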

Now the questions about digital technology as embodied in the CD format are about to disappear. The 4.7-gigabyte capacity of the DVD-Audio disc, achieved by a smaller pit size, closer track pitch, and a laser of slightly shorter wavelength, can give us 5.1 channels with a 24-bit depth, and 24 bits give a maximum S/N ratio of 144dB. Distortion is at that level too. Such low levels of noise and distortion are well beyond the limits of human hearing—and even beyond the limits of electronics, for such fundamental reasons as thermal noise in circuits and transducers.
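The point about thermal noise can be put into numbers. The sketch below compares the 24-bit figure with the Johnson noise of a single resistor, using the standard sqrt(4kTRB) expression. The 1k ohm source resistance, 20kHz bandwidth, 300K temperature, and 2V RMS full-scale level are assumptions chosen only for illustration; they are not taken from any particular product.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value

def word_length_range_db(bits: int) -> float:
    """Ratio of full scale to one quantization step, in dB."""
    return 20 * math.log10(2 ** bits)

def thermal_noise_vrms(resistance_ohm: float, bandwidth_hz: float,
                       temperature_k: float = 300.0) -> float:
    """Johnson-Nyquist noise voltage of a resistor: sqrt(4kTRB)."""
    return math.sqrt(4 * BOLTZMANN_J_PER_K * temperature_k
                     * resistance_ohm * bandwidth_hz)

def analog_range_db(signal_vrms: float, noise_vrms: float) -> float:
    """Signal/noise ratio of an analog stage, in dB."""
    return 20 * math.log10(signal_vrms / noise_vrms)

if __name__ == "__main__":
    noise = thermal_noise_vrms(1_000.0, 20_000.0)    # assumed 1k ohm, 20kHz
    print(f"24-bit range:   {word_length_range_db(24):.1f} dB")
    print(f"resistor noise: {noise * 1e6:.2f} uV RMS")
    print(f"analog range:   {analog_range_db(2.0, noise):.1f} dB")  # assumed 2V RMS
```

With these assumed values the resistor alone limits the analog stage to roughly 131dB, more than 10dB short of what the 24-bit word can describe.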

On the sampling-rate front, 96kHz gives us a guard band of 28kHz between the nominal 20kHz top of the audioband and the Nyquist Frequency of 48kHz. This can be used to make the anti-alias filter less sharp—ie, with a less steep initial rolloff—which will reduce pre- and post-ringing on impulses. Alternatively, by staying with a 2kHz guard band, we can record up to 46kHz at 144dB signal/noise, if you think you can hear that high (footnote 3). (Papers have shown that some young people can hear past 20kHz.)
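The trade-off between guard band and filter steepness can be sketched with Kaiser's empirical estimate of the length of a linear-phase FIR low-pass filter. The 96dB stopband attenuation and the 20kHz passband edge are assumptions for illustration, and real converter filters are designed in other ways, so treat the tap counts as relative indications only.

```python
import math

def guard_band_hz(sample_rate_hz: float,
                  passband_edge_hz: float = 20_000.0) -> float:
    """Width of the transition band between passband edge and Nyquist."""
    return sample_rate_hz / 2 - passband_edge_hz

def kaiser_fir_length(sample_rate_hz: float,
                      stopband_atten_db: float = 96.0,
                      passband_edge_hz: float = 20_000.0) -> int:
    """Kaiser's estimate of FIR taps needed for a given transition width."""
    # Transition width expressed in radians per sample.
    transition_rad = (2 * math.pi
                      * guard_band_hz(sample_rate_hz, passband_edge_hz)
                      / sample_rate_hz)
    order = (stopband_atten_db - 7.95) / (2.285 * transition_rad)
    return math.ceil(order) + 1

if __name__ == "__main__":
    for rate in (44_100, 48_000, 96_000):
        print(f"{rate} Hz: guard band {guard_band_hz(rate):.0f} Hz, "
              f"about {kaiser_fir_length(rate)} taps")
```

Under these assumptions the 44.1kHz filter needs on the order of 130 taps, against a little over 20 at 96kHz. A shorter filter has a correspondingly shorter impulse response, which is the reduction in pre- and post-ringing described above.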

Any way you slice it, the 24-bit word length and 96kHz sampling rate possible for 5.1-channel recordings put DVD-A well beyond the limits of audible problems. However, the DVD-A launch was delayed until copy-protection issues had been worked out. [And even with players available as of summer 2000, the copy-protection issues had not been fully resolved.—Ed.] The good news is that, because it is based on the Digital Versatile Disc technology, which benefits from significant economies of scale, the first-generation DVD-A players will be relatively inexpensive.

Meanwhile, there is another competing standard to consider: the Sony/Philips Super Audio CD. Rather than use LPCM, SACD uses a proprietary fixed delta-sigma modulation code known as Direct Stream Digital (DSD). As delta-sigma modulation technology is well-known to audiophiles, I will only briefly summarize it here.

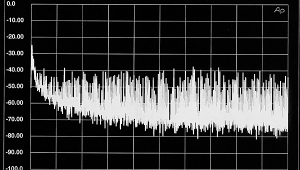

In the simplest form of delta-sigma modulation, a 1-bit converter is oversampled in a feedback loop. By definition, a 1-bit system—it's either on or off—suffers from very high quantization noise. However, the feedback loop shapes the noise energy so that noise is acceptably low in the audible band but high outside it.
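A minimal sketch of that simplest form, a first-order loop with a 1-bit quantizer, is shown below. It is a toy model for illustration and not the DSD modulator, which is of higher order; the function names, the 64x oversampling figure, and the block-average decoder are all assumptions made for the example.

```python
import math

def delta_sigma_first_order(samples):
    """Encode samples in [-1, 1] as a +1/-1 bitstream (first-order loop)."""
    integrator = 0.0
    feedback = 0.0                     # previous 1-bit output, fed back
    bits = []
    for x in samples:
        integrator += x - feedback     # accumulate the running error
        out = 1.0 if integrator >= 0 else -1.0   # the 1-bit quantizer
        bits.append(out)
        feedback = out
    return bits

def block_average(values, window):
    """Crude low-pass decoder: average each block of `window` bits."""
    return [sum(values[i:i + window]) / window
            for i in range(0, len(values) - window + 1, window)]

if __name__ == "__main__":
    oversampling = 64                  # assumed ratio for the example
    n = 16 * oversampling
    # One cycle of a half-amplitude sinewave, heavily oversampled.
    sine = [0.5 * math.sin(2 * math.pi * i / n) for i in range(n)]
    decoded = block_average(delta_sigma_first_order(sine), oversampling)
    print([round(v, 2) for v in decoded])
```

Each output is only +1 or -1, yet the local average of the bitstream tracks the input. The quantization error has not gone away; the loop has pushed most of it to high frequencies, where the averaging filter removes it.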

Audiophiles have been suspicious of negative feedback for years, but for reasons I don't understand, they appear to have given delta-sigma modulation their implicit approval. Unlike feedback loops in amplifiers, where the loop circuit is already somewhat linear, the feedback loop in a delta-sigma converter is wrapped around the most nonlinear component we can imagine: a 1-bit A/D converter.

Why would anybody do this? As two points define a straight line, by definition a 1-bit converter has zero linearity error. With noise-shaping we can have a converter with as many bits' worth of effective resolution as we like, as long as our oversampling rate is high enough and the noise-shaper is of a high enough order. The delta-sigma modulator is an effective way to make high-resolution converters cheap by replacing complex, multibit ADCs and DACs with simple, cheap, single-bit units. In the real world, of course, that simple converter is very complex to design: noise and distortion must be considered in the ADC's noise-shaper and sampler, and in the charge-to-voltage converter and reconstruction filter of the DAC.
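The claim that resolution can be bought with oversampling ratio and noise-shaper order can be illustrated with the usual linearized estimate, in which the quantizer is treated as a source of white noise and the loop applies an ideal noise transfer function of the given order. This is a sketch of that idealized model only: a 1-bit quantizer violates the white-noise assumption, and practical high-order loops use gentler noise shaping to stay stable, so real converters fall well short of these figures. The function name is illustrative.

```python
import math

def delta_sigma_sqnr_db(order: int, quantizer_bits: int,
                        oversampling_ratio: float) -> float:
    """Idealized in-band SQNR of a delta-sigma modulator (linear model)."""
    # SNR of the bare quantizer for a full-scale sinewave.
    quantizer_db = 6.02 * quantizer_bits + 1.76
    # Noise shaping raises the total noise power by this factor...
    shaping_penalty_db = 10 * math.log10(
        math.pi ** (2 * order) / (2 * order + 1))
    # ...but each doubling of the oversampling ratio removes
    # (2*order + 1) * 3 dB of it from the audioband.
    oversampling_gain_db = (2 * order + 1) * 10 * math.log10(oversampling_ratio)
    return quantizer_db - shaping_penalty_db + oversampling_gain_db

if __name__ == "__main__":
    for order in (1, 2, 5):
        for ratio in (64, 128):
            print(f"order {order}, {ratio}x: "
                  f"{delta_sigma_sqnr_db(order, 1, ratio):.1f} dB")
```

In this model a first-order loop gains about 9dB for each doubling of the oversampling ratio, and a fifth-order loop about 33dB, which is why both the ratio and the order matter.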

We get no free lunch with a delta-sigma modulator. Using a high degree of negative feedback around a strong nonlinearity can give rise to idle-channel tones and limit cycles. (In effect, the converter behaves as an oscillator: it puts out a repeating pattern even when there is no input.) Numerous papers in both the AES and IEEE journals describe this phenomenon and, in particular, the difficulty of analyzing, modeling, and eliminating these non-ideal effects.
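The repeating pattern is easy to reproduce in a toy model. The sketch below drives a first-order, 1-bit loop with a constant input and looks for the shortest period in its output, using exact fractions so that rounding cannot disturb the pattern. It is an illustration under simplifying assumptions: the function names are hypothetical, and the higher-order modulators used in practice produce far more complicated, quasi-periodic behavior than this.

```python
from fractions import Fraction

def modulate_dc(level: Fraction, count: int):
    """First-order 1-bit delta-sigma output for a constant input level."""
    state = Fraction(0)
    feedback = Fraction(0)
    bits = []
    for _ in range(count):
        state += level - feedback      # integrate input minus fed-back bit
        out = 1 if state >= 0 else -1  # 1-bit quantizer
        bits.append(out)
        feedback = Fraction(out)
    return bits

def shortest_period(bits, skip: int):
    """Shortest repeat length in the bitstream after `skip` start-up bits."""
    tail = bits[skip:]
    for period in range(1, len(tail) // 2 + 1):
        if all(tail[i] == tail[i + period] for i in range(len(tail) - period)):
            return period
    return None                        # no repetition found in this window

if __name__ == "__main__":
    for level in (Fraction(0), Fraction(1, 2), Fraction(1, 5)):
        period = shortest_period(modulate_dc(level, 256), skip=16)
        print(f"input {level}: pattern repeats every {period} samples")
```

With no input at all, the loop alternates +1 and -1 at half the sampling rate, which is harmless. With a small DC offset the pattern lengthens, and a pattern that repeats every P samples has its fundamental at the sampling rate divided by P, so long patterns can put tones into the audioband.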

These quasi-periodic oscillations have been shown to be audible, at least with test signals, by me and Steven Norsworthy in the AES preprint mentioned in the "References" sidebar. They are easy to hear even using Matlab computer simulations (output files can be played through the computer's soundcard), but their quasi-periodic nature makes them hard to measure, even in computer simulations in the frequency domain. In that paper, and in previous work by Steve, it was shown that the tones can be reduced by increasing the oversampling rate and by using a coder of more than 1-bit quantization. In fact, all high-performance ADCs using delta-sigma modulation now use a quantizer with at least three codes (Crystal), four codes (AKM), or five codes (dCS). Oversampling rates are universally 128x (relative to 44.1kHz) or more.

Footnote 2: See, for instance, Robert Stuart, "Coding Methods for High-Resolution Recording Systems," presented at the 103rd Audio Engineering Society Convention, New York, 1997. Available from the AES as preprint #4639, and in expanded form from the Meridian website library as Coding2.PDF .—John Atkinson

Footnote 3: For a discussion of this subject, see my "What's Going On Up There?" in the October Stereophile, pp.63-73.—John Atkinson