| Columns Retired Columns & Blogs |

What's Going On Up There? Letters

Letters in response appeared in the December 2000 issue of Stereophile:

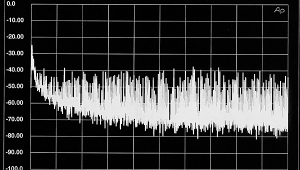

Footnote 1: For human hearing, see The Shannon Space, fig.25.

Better sound quality and ultrasonic spectra

Editor: Thanks for John Atkinson's interesting and informative article about sounds above 22kHz. I was already pretty convinced, after reading the James Boyk paper referenced in the article, that musical instruments do indeed make sounds above 20kHz, and JA's results nicely confirm this. I agree that the most likely reason for audible improvements of sampling rates higher than 96kHz, or of upsampling, is getting the filter artifacts further away from the music, which can only be a good thing.

Perhaps another reason low frequencies sound better at higher sampling rates is that harmonics of a sound with a low fundamental are that much closer together [than with a high fundamental]. Even if the object making the sound doesn't excite really high harmonics, the ones that are present are fine in structure and in inverse proportion to the fundamental. In this sense, the lower the frequency, the greater potential richness of harmonics.

I address some of these issues in my listening notes about the Assemblage DAC-3 and D2D-1 upsampler/jitter reducer.—Jeff Chan, jeff@supranet.net

Human hearing and ultrasonic spectra

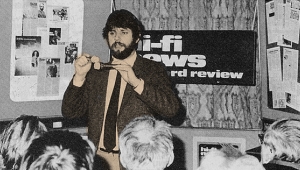

Editor: I read with great interest John Atkinson's article "What's Going On Up There," in the October Stereophile (pp.63-71). His analysis of the spectral content of music sources is very useful in demonstrating the "supersonic" content of instruments.

I appreciate very much his reference to my work on coding requirements for high-quality audio. For information, the March 1998 Audio article referenced by JA was an abstract of a key paper I gave to the Audio Engineering Society ("Coding Methods for High-Resolution Recording Systems," presented at the 103rd AES Convention, New York, 1997. Copies can be obtained from the AES as Preprint 4639. There is a more complete but unpublished version, "Coding High Quality Audio," available for download.

However, John quoted me somewhat out of context, and I would like to clarify this if I may. Proposing any objective limits to human hearing is brave and certain to be incorrect, but at the time I felt it was necessary to put a stake in the ground to try to ensure that new carriers and playback equipment were neither over- nor under-specified.

It is fairly obvious that analysis of both natural and manufactured sounds will find content above 20kHz—examples include percussion, synthesizers, muted trumpets, and bats. It is also no surprise that JA discovered content above 32kHz. The important point to differentiate is that my study was entirely born of human psychoacoustics and was endeavoring to establish the limits required to code a high-quality audio channel so that all human-detectable sounds would be conveyed.

In these papers I analyzed the human hearing system from an information viewpoint and came to the conclusion that an optimally shaped channel operating with PCM coding at 52kHz and 11-bit resolution could replace the channel between the cochlea and the higher cerebral processes (footnote 1). This was an extreme analysis from a pure information perspective, and the suggestion that a 20-bit/64kHz PCM channel would be minimally adequate came from transforming the overall frequency response and threshold of human hearing from the perspective of incident sound. Put simply, there will be sounds above 32kHz; the question is, are they detectable in the context of the music content or channel noise? Time will tell, but until then I am comfortable with the assertion that so far as "on-air" content is concerned, 32kHz does represent a probable upper limit.

Now, that is not quite the end of the story, because we cannot just double 32kHz to get an optimum sampling rate. First, the delivery system has to exceed this innate capability if the thresholds are not to add. Second, human hearing manifests significant nonlinearities, especially in threshold regions, and sampling theory alone does not cover all the points. Therefore we separate out these four questions:

1) What is the minimum acoustic frequency response?

2) What sampling rate should be used?

3) What overall dynamic range do we need, and what is its spectral distribution?

4) How should the delivery channel be coded?

I have addressed the first question. The ARA, in its study (footnote 2, available for download), suggested that 26-32kHz "on air" was sufficient. My later paper tweaked that a bit, as JA noted. However, in neither case did we come up with bandwidth needs of 48kHz, or, especially, 96/100kHz. This point tells us a lot about what tweeters and amplifiers actually need to do (footnote 3). The justification for separating questions 1 and 2 is that, with systems whose tweeters do not even reach 20kHz (or for listeners who no longer hear above 15kHz), the difference brought by sampling at 96kHz compared to 44.1kHz can still be perfectly obvious.

To answer the second question, we need to adopt a sampling rate that is sufficiently higher than 64kHz (=2 x 32kHz) to ensure that real-world digital implementations do not suffer from band-edge anti-alias problems, and have sufficient digital-domain speed to keep significant filter effects above the "audible area." So in one sense I suggested that the faster the digits go, the better; that the sensible choice was 2x (ie, 88.2kHz or 96kHz). The engineer in me feels sure that when we have learned how to optimize the high-speed digital channels, any differences we may find now due to equipment-design issues between the 2x and 4x rates (ie, 176.4kHz and 192kHz, or DSD) will disappear.

To address the third question, we start from my conclusion that a 20-bit channel was the minimum. However, the assertion was qualified by pointing out that this was the required overall performance of the chain. We need to remember that each step, each signal process—be it conversion or modification—at minimum adds the noise equivalent to optimum dither in a PCM system, and in a 1-bit chain also adds unrecoverable nonlinearity. In a typical disc scenario there might be at least five processes between microphone and speaker—including, of course, mastering and editing. My paper points out that if any one of these processes is incorrect and performs even trivial truncation, then 20 bits is the absolute minimum resolution to achieve overall. Hence, and for the reason JA noted—that it is a multiple of 8 bits—24 bits has been chosen for premium work. 24 bits gives us sufficient dynamic range so that even if some equipment is not 100% and several processes happen on the way, the errors introduced by these real-world events can be benign, from a human audibility standpoint.

Finally, we come to the fourth question: How should the delivery channel be coded? Along with many workers in this field, I have steadily advocated linear PCM. PCM has these very clear benefits:

• An optimum dither is known that allows theoretically infinite amplitude and time resolution, albeit with a benign noise floor (footnote 4).

• PCM allows perfect linearity, at least in the digital domain.

• PCM can be losslessly compressed efficiently.

Therefore, it becomes clear why we proposed 2x rates: to give clear headroom for the human listener, and then to recover this by using lossless compression—hence was born MLP.

It is instructive to note that none of these benefits applies to 1-bit delta-sigma coding, such as the 64x method used for SACD. Key references on this topic include Malcolm Omar Hawksford's "Bitstream versus PCM Debate for High-Density Compact Disc," and Lipshitz and Vanderkooy (footnote 5).—Robert Stuart, Meridian Audio, Cambridge, England

Footnote 1: For human hearing, see The Shannon Space, fig.25.

Footnote 2: Acoustic Renaissance for Audio, "A Proposal for High-Quality Application of High-Density CD Carriers," private publication, (April 1995). Reprinted in Stereophile, August 1995, and in Japanese in J. Japan Audio Soc. 35, October 1995.

Footnote 3: Meridian Audio, "Advice for Mastering and Authoring DVD-Audio Content," available on the DVD Forum website.

Footnote 4: M.A. Gerzon and P.G. Craven, "Optimal Noise Shaping and Dither of Digital Signals," presented at the 87th Convention of the Audio Engineering Society, J. Audio Eng. Soc. (Abstracts), December 1989, Vol.37, p.1072, Preprint 2822.

Footnote 5: "Why Professional 1-Bit Sigma-Delta Conversion is a Bad Idea," Stanley P. Lipshitz and John Vanderkooy, presented at the 109th AES Convention, September 2000. [See Barry Willis' report in the December issue's "Industry Update."—Ed.]

- Log in or register to post comments