| Columns Retired Columns & Blogs |

The editor's introduction incorrectly includes Monkey's Audio in the list of lossy compression methods. It is indeed lossless.

Rick.

Dr. Brandenburg noted that there was a significant difference in sound quality between ASPEC's 64kb/s and 96kb/s data rates, but not much difference between 96kb/s and 128kb/s (64kb/s is about 1/11th the data rate for 16-bit linear PCM digital audio as found on a CD). ASPEC has scored well in subjective testing compared to other coders, but is more complex and introduces a greater delay time in decoding the signal.

The final presentation was by Christer Grewin, who headed the official subjective testing of these systems at the Swedish National Radio Company. He reported on the methodology, results, and conclusions of these tests. Swedish Radio's mandate was to determine which codec (bit-rate reduction encoder/decoder system) was the best sonically, and also to decide if the best system's audio performance was adequate for use in Digital Audio Broadcasting. Clearly, this was not a trivial undertaking. I was thus very curious about how the tests were devised, and the various systems' subjective performances.

I was heartened by the paper's introduction: "Subjective assessments or listening tests have always played an important part in the evaluation of audio equipment. Maybe even more so today. A major part of today's (at least professional) audio equipment, show electric data with no or very little degradation compared to a straight wire. Still, audible differences can be detected.

"For certain types of digital audio equipment there are no adequate methods of objective measurements available."

With that auspicious beginning, Mr. Grewin described the test methodology. First, 60 "expert" listeners were chosen; 23 were appointed by Swedish Radio, 24 came from four codec development groups, and the rest were selected from the AES and EBU (European Broadcast Union). Half the listeners were from outside Sweden. The CCIR listening test recommendations (CCIR 562-2) states that any system intended for high-quality broadcasting or reproduction shall be assessed by "expert" listeners exclusively. It was not specified what criteria were used to determine who was an "expert listener" for these tests.

Ten musical selections were chosen to evaluate the codecs, based on previous auditioning through the various codecs. They were: 1) Suzanne Vega (unaccompanied voice), 2) Tracy Chapman, 3) glockenspiel, 4) fireworks, 5) Ornette Coleman's Dreams, 6) a bass synthesizer, 7) castanets, 8) male speech, 9) bass guitar, and 10) trumpet. The playback system wasn't specified, but the D/A converter was a Philips DAC 960.

The four codecs evaluated were MUSICAM, ASPEC, ATAC (called ATRAC in Sony's recordable Mini-Disc), and SB/ACPCM, a less well-known system. Each was evaluated at three bit-rates: 128kb/s, 96kb/s, and 64kb/s. The latter two systems weren't fully implemented when the tests were conducted; consequently, no data were provided on these two systems. Because each codec introduces a different amount of decoding delay which could identify the particular codec, a system was devised in which the signals from the reference source and each codec were put through a digital delay line, making the delays equal.

The listening tests were "Triple Stimulus, Hidden Reference, Double Blind." Each listener sat with a box that had a level control and buttons for selecting the presentations and grading the codec in relation to the source. The subject was allowed to switch between three presentations: A, B, and C. Presentation A was always the reference signal from a DAT machine with no codec (16-bit linear PCM), and thus known to the subject. B was either the same signal encoded and decoded by the codec under evaluation (in real time), or the unaltered reference signal. C was either the reference or the codec, but the opposite of presentation B. The determination of whether B or C was the codec was chosen randomly.

The listener was to first identify if B or C was the codec, then rate the introduced degradation. The "CCIR Impairment Scale" was used, offering five choices of degradation. A "1" rating was classified as "very annoying," "2" was "annoying," "3" was "slightly annoying," "4" was "perceptible but not annoying," and a score of "5" was "imperceptible." Throughout the test, more than 20,000 grades were obtained, half of them on the hidden reference and half on the codecs.

The analysis of the codecs' performance was broken down according to musical selection. This yielded some interesting information: Some music was much more revealing of the codecs' degradation than other music. The most critical appeared to be Suzanne Vega's unaccompanied voice, the least critical the bass synthesizer and fireworks.

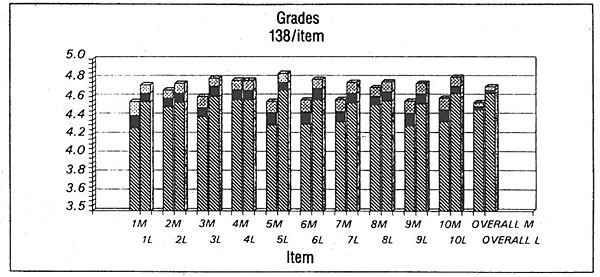

Fig.1 is a graphical representation of the data from one test, comparing MUSICAM at 128kbs to the reference. The numbers 1–10 across the bottom correspond to the ten musical selections listed above. The left-hand bar over each number is the codec's rated performance on that particular musical selection, the right-hand is the reference. The greater the difference between the bars' height, the worse the codec's sound quality. If the black and crosshatched boxes on top of the bars don't overlap, then there is "statistically significant" evidence of audible impairment. The paper presented 14 graphs representing the test data.

These tests were performed twice, once in 1990 and again in 1991. After the first round of subjective testing in 1990, Swedish Radio "...came to the conclusion that none of the codecs could be generally accepted for use as distribution codecs by the broadcasters, at the stage of development by the time of the tests in July, 1990." They noted that ASPEC had the best sound quality, but had the greatest complexity and longest delay time (an important factor in broadcast applications). MUSICAM sounded slightly worse, but used simpler encoding and decoding hardware. This prompted SR to suggest that the ASPEC and MUSICAM development groups combine forces and produce a system with the best parts of each codec.

Although they regarded the level of impairment "small," Swedish Radio based their rejection on the belief that there was great potential for improving the codecs' performance. In addition, the realization that the selected system may well be the replacement for AM and FM broadcasting for the next 30 years or more weighed heavily in the decision. The paper used a metaphor to justify this cautious approach: "Artifacts that may be difficult to detect at a first listening will be more and more obvious as time goes by. It can be compared with somebody who moves into a new house. The first time he looks through the window he only sees the beautiful view. After a few days he detects a small flaw in the glass and from that moment he cannot look through the window without seeing the flaw."

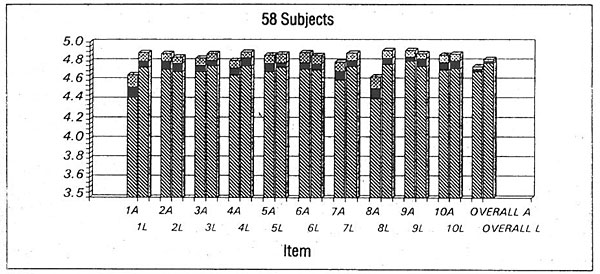

Swedish Radio's belief that the encoding algorithms could be improved were borne out in the second set of tests. The individual codecs performed much better in the 1991 tests, and there was very high correlation between the 1990 and 1991 results, validating the test methodology in SR's view. It was determined that "Both codecs have now reached a level of performance where they fulfill the EBU requirements for a distribution codec." Fig.2 shows the performance of the so-called "Layer III" codec (a combination of ASPEC and MUSICAM [subsequently to become ubiqutous as MP3—Ed.] ) at 128kb/s, the target data rate for DAB. (The musical selections were slightly different in the 1991 tests and don't correlate to the ten listings above, with the exception of #1, Suzanne Vega, footnote 3).

The panel discussion later that evening provided some, but not nearly enough, challenge to these bit-rate reduction systems. Michael Gerzon (footnote 4). noted that none of the musical selections used in evaluating the codecs was of naturally miked classical music; instead, all the program material was made with techniques that don't preserve spatial cues. Because this was the case, he argued, the listening tests would not reveal the codecs' possibly destructive effects on spatial information.

Gerzon prefaced these remarks by saying that older technology tends to preserve spatial information and newer technology doesn't, apparently a reference to the controversy over analog and digital. This was an excellent point, and one that I'm surprised Swedish Radio didn't consider, especially since the methodology and statistical analysis were so rigorous. This omission is even more glaring when one realizes that the low-level information thrown away by the codec is the information likely to contain spatial cues.

Other discussions focused on the effects of cascading codecs in several encode/decode cycles. This is an important issue; it is likely that broadcast signals will be transmitted and stored for later broadcast. Everyone on the panel agreed that multiple encode/decode cycles were a problem, producing "cumulative impairment." Two cascades were reportedly audible, with very rapid degradation associated with each successive generation.

One question not raised during the panel discussion was the effect of the playback system and environment on unmasking. All these systems produce huge objective errors that are presumably masked by the correctly coded wanted signal, much the way the music masks tape hiss in an analog tape recorder. If the playback system produces large amplitude errors—very common in car stereos—the horrible distortion hiding beneath the signal may be unmasked. These amplitude errors are often intentionally introduced by graphic equalizers.

When asked about professional uses of this technology, the MUSICAM designer said that professional and consumer systems should be very similar if not identical for ease of transfer: "There should be a very simple relationship between professional and consumer versions." He also asserted that these systems are currently "suitable for production and archiving." Karlheinz Brandenburg urged caution, saying that "more work was needed" before such systems are ready for making master recordings.

Christer Brewin, who conducted the subjective assessments, suggested that the drive to implement these very new systems was overly hasty: "...standardization has been rushed on us...and mistakes will occur."

Bob Stuart made an interesting point: Masking thresholds imply a known playback level. How well do these systems work over the very wide range of playback volumes encountered in the real world? This question went unanswered.

Bob's next question, however, produced a disturbing revelation. He asked "What happens when you run out of bits?" to encode the signal. He also asked if there was any thought to putting a flag in the data to indicate that the encoder had violated the masking model.

Karlheinz Brandenburg's reply was bluntly honest: "We run out of bits all the time. Nearly all the time we wish to have more bits." Yves-François Dehery added that "you do not follow the psychoacoustic rules" (the masking model) when you run out of bits.

The panel discussion ran well over the allotted time, an indication of the interest in low–bit-rate encoding and the realization that such techniques are inevitable in all areas of audio.

I'll conclude this report on the conference and the state of bit-rate reduction with a question from a member of the audience. His query is the very embodiment of the kind of thinking that led to the development of these frightening schemes in the first place. The gentleman asked if, because few bits are used in quiet passages, "it was possible to commercially exploit those unused data areas," particularly during "the silence between notes."—Robert Harley

Footnote 3: The proceedings of the conference, Images of Audio, which include the description of these subjective tests as well as Malcolm Hawksford's digital primer, are available from the Audio Engineering Society Inc., 60 E. 42nd St., New York, NY 10165-0075. Web: www.aes.org. The paper on subjective assessment of low–bit-rate encoders is far more detailed than described here.—Robert Harley

Footnote 4: Michael Gerzon is a theoretical mathematician as well as an audio designer. He has used his considerable analytical skills to argue the audiophile position on many occasions. At the 1991 Paris AES convention, he presented a paper that called into question the psychoacoustic masking models on which all these codecs are based. His contention is that when the error is correlated with the signal, the masking threshold is reduced by as much as 30dB. If he is correct, the present bit-rate reduction systems are fatally flawed. He also presented six papers at the New York AES convention in October 1991, one of which was entitled "Limitations of Double-Blind AB Testing."—Robert Harley

The editor's introduction incorrectly includes Monkey's Audio in the list of lossy compression methods. It is indeed lossless.

Rick.

The editor's introduction incorrectly includes Monkey's Audio in the list of lossy compression methods. It is indeed lossless.

You are correct. Brain fart on my part. I have removed it from the list of lossy codecs.

John Atkinson

Editor, Stereophile

An article on 20+year old lossy codecs, really? Is this the internet wayback machine?

For the good of high end audio, it's time to retire, Robert. Stereophile, you can do WAY better for an article on lossy codecs. The only danger is staying the course with Robert and his discussions of old codecs.

You wanna attract a larger high end market? Write about how LAME 3.99.5 V2 or better is audibly essentially transparent with music compared to lossless. Or write about OPUS 1.1 for low bitrate uses. Or how spending wisely on a system like foobar2000 or JRiver playing FLAC or 3.99.5 V0 with a $30 Behringer DAC with a $60 calibrated microphone and REW, using Blue Jeans cables, will allow more funds for better, really cool speakers, multiple subwoofers and room treatments.

An article on 20+year old lossy codecs, really? Is this the internet wayback machine?

My goal is eventually to have everything that was published in Stereophile available in our free on-line archives. I thought this article from 22 years ago would be of interest.

Write about how LAME 3.99.5 V2 or better is audibly essentially transparent with music compared to lossless. Or write about OPUS 1.1 for low bitrate uses.

With hard-drive prices at an all-time low and fat "pipes" becoming the domestic norm, why would anyone need their music encoded with a lossy codec at all, if you intend to listen to that music seriously? Despite advances in codec technology, there is yet to be a lossy codec that is transparent to all people at all times on all systems with all types of music.

So why not just use FLAC or Apple Lossless for your music library and forget about the possible sonic compromises of a lossy codec, other than where it makes something possible that would otherwise be impossible, such as listening to a live concert from the UK's BBC 3 via the Internet?

John Atkinson

Editor, Stereophile

Did you not read John's intro? Did you not notice the published date of Dec 1 1991? Robert has not written for stereophile for years. He is editor of another magazine.

Great article and essential reading for manufacturers and discerning consumers (i.e. audiophiles). Or as someone once said "Those who don't have a grasp on the history of their circumstances are likely to repeat some of its mistakes".

After all, as in the case of LAME 3.99xyz, how many times have we heard that since the beginning of digital? I convert my FLAC tracks to 320k MP3's for playing outdoors and on public transport, but I don't lose the originals. As Cookie Marenco has advised, best to get the earliest master possible, even in digital.

Interesting historical reference. But why is this "essential reading"? Nothing wrong with the contents but in 2013, I think it's fair to say that we've all experienced it (MP3, AAC, Ogg Vorbis...) and there's really no big "danger" here so let's not be too emotional about all of this.

I think many of us feel that 320kbps is indistinguishable qualitywise from lossless FLAC or ALAC but that's different from advocating wholesale conversion of music archives to a lossy format! It does however at least help put our expectations into perspective and when/if I need to go portable with my music, conversion to MP3 isn't hysterically treated as if it were some kind of "big deal". No great danger, no boogeyman, no monster.

This article talks about listening tests using MP3 at 128kbps. I think we're all quite aware of this bitrate as not being enough to ensure CD-equivalent sonic integrity and as far as I'm aware, no commercial service has been selling music at this resolution for years... I suppose it's still used for streaming radio, but again, no audio lover I know of would be mistaken with considering this bitrate as true high fidelity.

Better to keep putting attention on squashed dynamics due to the "loudness wars" than harp on the minimal effects of high bitrate lossy compression these days.

The reason why the attention would be prioritized on lossy files over loudness wars is because the former has permanence based on the reality of the files being archived. Worse I think is that the lossy files (on average) are more likely to be married with loudness adjustments than lossless files. Your experience could vary a lot, and I can't declare a hard-and-fast rule on any of this, but just ask the question around audiophile communities: "If loudness increases bother you, would you be more likely to find those loudness increases in lossy or lossless media?"

I see no difference over the years between loudness of MP3 vs. CD rips. If the original master is loud, it's loud. It's not like record companies release 2 versions - less compressed for CD release, louder for Amazon/iTunes as far as I can tell. It's the vinyl releases which tend to be less loud at least in a large part due to limitations of the technology.

Again, nobody's advocating archiving with a lossy process. MP3 works and serves its purpose with minimal if any perceivable sound degradation to human ears/brains at commonly used (256/320) bitrates in 2013.

Again, nobody's advocating archiving with a lossy process...

Back at the time this article was published, they were :-(

John Atkinson

Editor, Stereophile

In the '80s we knew that cassette was an easily audible downgrade to vinyl albums but many if not most of us were happy to record our albums and play the cassettes, not only in our cars, but often at home just to preserve the condition of the albums.

What we are trying to do is to capture a musical performance perfectly, store it permanetly and to reproduce it perfectly later on.

There have always been constraints on the process like size, cost, technological state of the art. In the digital domain, lossy compression was a response to some of those constraints like storage space and transmission bandwidth.

If you want lots of music in a small digital player or you want to stream music over limited bandwidth media, you are still need lossy compression of the digital source material. But as constraints fall away we can usher in a new era of ever increasing reproduction fidelity in the digital domain. Lossy compression will seem quaint in a few years.

I hope the recorders of the performance will step up and improve the quality of their source files. (Dynamic range, distortion, etc.) We as "audiophiles" can still pursue our pastime of reproducing those source files as accurately/musically as possible.

The lossy codec tests suffer from the same flaw that all audiology has since the 1930's when they started using a vacuum tube sine wave oscillator. The test subjects listen to music predominantly if not exclusively through speakers. This means their psycho-acoustic processing is acclimated to the temporal and spatial distortions of speakers. In fact, it appears from the description that the "Expert listeners" are people who listen to speakers for a living.

Professional acoustic musicians hear differently. In particular, they hear phase and have a much richer perception of acoustic space. The latest research indicates that they have far more inter-connection neurons especially traversing the Corpus Collossum, and that the increased neuro-genesis is driven by focused listening to acoustic sounds in childhood. These are the only "Expert Listeners" to music (as opposed to reproduction) in the industrialized world.

I have been working with conservatory trained musicians who have heard acoustic music at least two hours a day from childhood, and they agree that MP3, AAC and internet streaming CODECs are not merely detectable, but un-listenable. Even at 320K, I fatigue in under a half hour and take several hours to recover.

The information lost in bit reduction is largely the low level discrete multi-bounce echoes that illuminate the space where the recording took place. I take exception to the test tracks on that basis.

Two are processed pop recordings that started as mono close miked, deliberately colored center-electrode "vocal microphones" in a dead studio environment, two are artificial bass instruments with no acoustic reference. Of the transient signals, the fireworks were undoubtably recorded at a large distance which is effectively an acoustic peak limiter by absorbtion of high frequencies and phase shifting the remaining spectrum, and has no acoustic space reference like a rectangular room. Besides, nobody hears fireworks often enough to remember what they sound like. The Glockenspiel and Castanet tracks likely share some of these characteristics.

Modern trumpet typically is played legato with no transients to serve a time makers for echo decoding. This leaves the one jazz track which I don't know and male speech. If the male speech were recorded in near coincident stereo in a reverberant environment it may indicate something, but this unlikely.

Test signals should be pure acoustic recordings with no processing, complex and yet clear like a chamber orchestra with one player per part. Staccatto technique is essential to exercise the codec response to real acoustics, including staccatto acoustic bass instruments. Further, the test signal should be a voice with which the test subject is acclimated by recent experience (less than 24 hours). My favorite test signal is harpsichord because it is the closest acoustic equivalent to a Dirac impulse function and I hear it daily.