
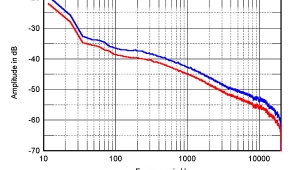

Thanks, Jim, for a nice synopsis of the reality of “scientific audio”. There are indeed unsound “scientific practices” slithering through the fields of sound (apologies for the pun). Some are intentional, meant to disguise the truth; others are simply naïve uses of the tools. Such contamination has poisoned objectivity; snake venom abounds.

Once we are able to measure all the relevant parameters with sufficient precision (and report them honestly), the mysticism will all but vanish; audio nirvana will become more accessible and snake oil will evaporate. The problem is to identify and confirm the key measurement parameters in the reproduction chain. This is not easy, and here we will need to do the tedious work of scientific listening tests. Fortunately, once those parameters are confirmed, the number of such tests drops sharply: measurement can stand in for “listen testing” each piece of gear individually. Someday… I am optimistic, yes.

WillW