| Columns Retired Columns & Blogs |

The Blind, the Double Blind, and the Not-So Blind

I was once in a sushi bar in Osaka; sitting next to me was a live abalone, stoically awaiting its fate. It stuck its siphon out of its shell, the waiter tapped the tip with a spoon, the siphon withdrew. Again the siphon appeared, again the waiter tapped it with a spoon, again it withdrew.

Footnote 1: Stereophile, Vol.14 No.12, December 1991, pp.70–75.

I was reminded of that abalone's blind persistence at Hi-Fi '94, Stereophile's recent High-End Hi-Fi Show in Miami, when the subject of blind listening tests once again raised its head in one of the "Ask the Editors" sessions (see Barry Willis's report on p.74).

Like Chuck Butler in this issue's "Letters," my Miami inquisitor insisted that audio reviews are only valid when performed blind; ie, when the subject is not aware of the identity of the component under test. Nevertheless, having designed, supervised, and taken part in a very large number of blind tests in the last 18 years, I have found that such tests are not only very difficult to properly organize, but, unless well-designed, are not very sensitive. Many such tests have indicated no significant identification of audible differences between components, even when such differences are later shown to exist.

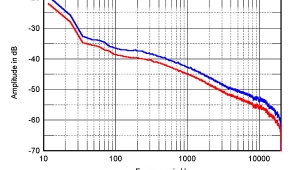

In a famous example, tests in the early 1990s run by the Swedish National Radio Company on behalf of the EBU investigated the degradation wrought on music signals by various audio data-reduction algorithms (footnote 1). After several stages of "Triple Stimulus, Hidden Reference, Double-Blind" tests, with 60 expert listeners and over 20,000 individual presentations, two algorithms were pronounced as being good enough for the needs of broadcasters. Yet when the late Bart Locanthi auditioned one of these "winners" (footnote 2), he immediately detected a low-level whistle at 1.5kHz that had gone unnoticed in the formal tests! (See next page.)

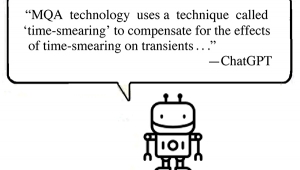

This story nicely illustrates a basic tenet of Scientific Method: that a null result from a blind test does not mean that there isn't a difference, only that if there was one, it could not be detected under the specific conditions of that experiment. Yet the people organizing such tests don't want to admit that their time has been wasted, nor do they like to admit that their results are meaningless.

As I've written before, this whole debate concerns belief systems, not facts. Whether you are a so-called "Objectivist" or a so-called "Subjectivist," the issue of blind testing represents the actual point where two opposed faiths clash. The latter camp tends to reject all "scientific" results—there was strong criticism recently in other high-end publications of Stereophile's inclusion of measurements in reviews—while Objectivists also pick and choose from the whole picture only that evidence which lies comfortably within their own belief structures. Even though they, like Mr. Butler, wrap themselves in the flag of "objectivity," they cast their own subjective veil over the truth—a truth that exists independently of the observers. I suspect that they fear living in a world where, say, amplifiers can be found to sound different from one another. For what else might then be audible?

How, then, can blind tests conceal small but real subjective differences? The answer is that they introduce additional uncontrolled variables that confuse and confound the results.

First, the subject doesn't directly judge the object under test—as in wine tasting or the testing of drugs—but only indirectly through its effect on an information-bearing, emotionally loaded stimulus: music. The choice of music for a test is far from trivial—as one subject said during our blind amplifier tests in 1989, "You have to care whether there is a difference or not." The very nature of music is also that it changes with time; you very rapidly lose your judgmental bearings in a blind test unless you use continuous test signals—pink noise, for example. Yet who listens to pink noise under normal conditions?

Second, the test involves an unnatural degree of stress. Some Objectivists correlate this "stress" with "fear of failure," but it's actually much more complex than that. Blind testing puts the subject in a listening state vastly different from that which is normal for listening to music (footnote 3)—how can this not be a confusing variable?

In a classic ABX test, for example, instead of perceiving the music as a whole, as is usual, the subject is performing the following task: Upon being presented with a piece of music, she has to make an arbitrary decision as to whether it's "A" or "B." The switch is then thrown, and she sets up two hypotheses: either the second is a repeat of the first, or it's different.

Let's assume that she plumps for the first. She now frantically listens for aural evidence that this hypothesis is correct; she may then hear the start of something that might show that it's incorrect, but then the switch is thrown. Again she listens for more evidence; but, again, before her arbitrary hypothesis is shown to be correct or not, the switch is thrown once more. The result is listener stress and confusion which, not surprisingly, randomize the results.

If such factors can be eliminated or allowed for, then blind tests have their uses: witness this issue's loudspeaker survey, which Tom Norton organized in a superbly effective manner. But the real matter for discussion is whether or not a difference, once heard, is of importance to an individual listener. Sonic differences may well exist, but if they're below the average listener's threshold of hearing, they might as well not exist. This thorny topic, however, must be left for a future "As We See It."—John Atkinson

Footnote 1: Stereophile, Vol.14 No.12, December 1991, pp.70–75.

Footnote 2: Stereophile, Vol.15 No.1, January 1992, p.69.

Footnote 3: See Robert Harley's "The Listeners' Manifesto," Stereophile, Vol.15 No.1, January 1992, p.111.

- Log in or register to post comments